Non-unicode encodings discussion

-

I’m not sure how far I want to take this, because I’m typically not interested in encodings other than UTF-8, but I’m trying to understand a bit more about how Notepad++ treats them. Let’s see how this goes…

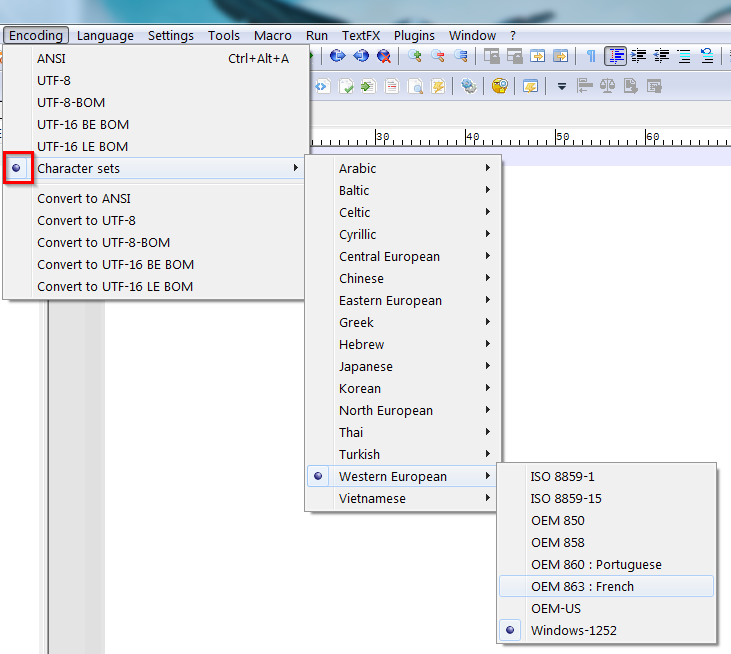

If I “get” a file into “ANSI” mode in Notepad++, shown in the Encoding menu thusly:

and on status bar like so:

Then it seems like it should be “in” my current ANSI codepage as shown by Debug Info:

And indeed the characters that I see if I have a file of characters/bytes with values from 128-255 seem to be the ones from HERE, so that’s good…

But I’m curious why then this isn’t shown with a round checkmark in the menus:

because isn’t that what I have?

(I have more questions but let’s see where this exploratory one goes…)

-

Technically, yes, but does it make sense to add it there as well?

I don’t think so.

If I search for the current encoding via the menu,

I would return immediately if I saw that ANSI was selected.

But that’s me, no idea what others would do.Curious, what are the other questions?

-

Hello, @alan-kilborn, @ekopalypse and All,

To Alan :

Yes, you’re just right about it ! And I would add that this mark should be in all sub-entries, too, UNDER

Character Sets, as below :Character Sets • Western European • Windows-1952Thinking about it, we could use, for instance, the equal sign

=, instead of the•character to show what is the defaultANSIcode page !It would be a shortcut of the expression

ANSI = Windows-1252;-)Giving this scheme :

Character Sets = Western European = Windows-1952Which would clearly show that, with a specific Windows localization, the term

ANSIencoding represents the Western European encoding namedWindows-1252:-)

To All,

The

default ANSI code pageis the default encoding, used by Windows, when dealing withone-byteencoded files, so for all theNON Unicodeencoded files.In this case, each encoding simply assigns which characters must be displayed for the

256possible codes. Actually, for the last128codes, between\x80and\xFF, as the first128characters just represent the universalASCIIcharacter set, present in practically all existing encodings !Remember that, when you decide to choose an other NON-Unicode encoding, with the

Encoding > Character Sets > ...option, Notepad++ does not change the current file contents and simplyre-interpretsall the codes, between\x80and\xFF, in order to display the appropriate character, relative to that specific encoding !Best Regards,

guy038

-

why then this isn’t shown with a round checkmark in the menus

If you select

Windows-1252from that “sub-submenu” it looks like this:

So, your choice in the “sub-submenu” is reflected by a checkmark besides the

Character setsentry of the uppermost menu level. Since the menu entries at this menu level work like radio buttons, it is not possible to check bothANSIandCharacter setsentries at the same time.we could use, for instance, the equal sign =, instead of the • character to show what is the default ANSI code page

Sorry, we can not because the • symbol is the one wich is used in Windows menus to indicate the active option out of a set of exclusive options (radio button functionality).

I know, this is only a technically explanation. But I think you asked the wrong question. The real question should be:

Why is there this

ANSImenu entry and additionally all theseWindows-XXXXentries in the “sub-submenus”?The answer to this question might be:

It is more user-friendly to have a menu entry at the uppermost menu level that indicates the current character encoding as long as it is set to one of these so called ANSI code pages.

Depending on Windows’ locale,

ANSIis the same asWindows-1252(e.g. in the US and Western European countries like Germany, France and Spain), in other countriesANSIis the same asWindows-1250(e.g. in Poland, Czechia, Romania, Hungary) and in Russia and Ukraine it is the same asWindows-1251.But to be exact, the naming ANSI for whatever Windows code page is a misnomer since the ANSI (American National Standards Institute) never released the character encoding standards that are called ANSI nowadays. For further details one can read >> this Wikipedia article <<<.

To verify my statement from above regarding “what means

ANSIencoding in different countries” one can do a little test. Copy the following code and paste it in Notepad++ into a new ANSI encoded file with filename extension.vbs, for exampleCharTableGenerator.vbs:Option Explicit Dim objFSO, objWshShell, objOutFile Dim strDesktopFolder, strOutFilePath, intChr Set objFSO = CreateObject("Scripting.FileSystemObject") Set objWshShell = CreateObject("WScript.Shell") strDesktopFolder = objWshShell.SpecialFolders("Desktop") strOutFilePath = objFSO.BuildPath(strDesktopFolder, "CharTable.txt") Set objOutFile = objFSO.CreateTextFile(strOutFilePath, True, False) For intChr = 128 to 255 objOutFile.Write Chr(intChr) If intChr mod 8 < 7 Then objOutFile.Write " " Else objOutFile.Write vbCrLf End If Next objOutFile.CloseRun this code by double-clicking its file. The code generates a new file named

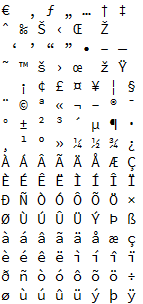

CharTable.txtat your Windows Desktop. Open the file created by the script with Notepad++.On systems with ANSI code page set to Windows-1252 (USA, Germany, France, Spain and others) it should look like this:

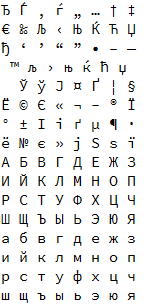

On systems with ANSI code page set to Windows-1251 (Russia, Ukraine and maybe others) it should look like this:

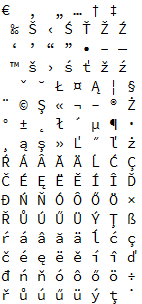

On systems with ANSI code page set to Windows-1250 (Poland, Czechia, Romania, Hungary and others) it should look like this:

-

@Ekopalypse said:

Curious, what are the other questions?

Patience, I have many! :-)

But at the moment I will concentrate on responding to the replies from my first query.@guy038 and @dinkumoil said:

And I would add that this mark should be in all sub-entries, too, UNDER Character Sets , as below

So, your choice in the “sub-submenu” is reflected by a checkmark besides the Character sets entry of the uppermost menu level.

My screenshot with the red dot could have been better, i.e., more like @dinkumoil 's.

@dinkumoil said:

But I think you asked the wrong question. The real question should be:

Why is there this ANSI menu entry and additionally all these Windows-XXXX entries in the “sub-submenus”?Yes, perhaps…but when one has questions about potentially confusing things, it might be hard to formulate the best question as a starting point.

The other information by @guy038 and @dinkumoil is good, but largely known to me, but certainly OK to inform others as part of this larger discussion. I do understand “encoding” itself, so we don’t need to go down that road, unless a discussion helps someone else.

So something that @dinkumoil touches on, and is another question of mine, is “what settings in the Preferences are related to encoding?” One would think this should be easily answered, but I’m not so sure.

In @dinkumoil 's screenshot we have the “New Document” settings. It seems like these settings should be when we create a new document with Ctrl+n or File > New. It is not clear that these settings effect loading of an existing file. And a real stunner: I had no idea that Default language setting might affect things.

There’s also Autodetect character encoding on file open on the MISC. tab of the Preferences.

What is being autodetected here?

Encodings with BOM shouldn’t need this, right? I mean, why try to go against what the BOM is telling you?

So are we left with attempting to autodetect between a BOMless UTF-8 and ANSI/Character Set?The N++ user manual seems a bit light on these topics.

I’m a bit hesistant to put out more questions right now, for fear of sending the discussion off to the “wrong place”. :-)

-

It’s even more complicated than that, if I remember correctly.

Documents like html and xml can specify encoding in tags and npp recognizes and respects this.

Sessions also have the encoding of files.

Detection, when enabled, is done as a last ditch effort to determine encoding when all else has failed before. -

@Ekopalypse said in Non-unicode encodings discussion:

Sessions also have the encoding of files.

So this is what allows Notepad++ to “remember” a file’s “character set” chosen by the user.

As long as the user doesn’t close the file (remove it from the active session), they wouldn’t have to re-choose the correct ANSI “encoding” (if using one that is not their default, e.g. -1252)?It’s even more complicated than that, if I remember correctly.

Documents like html and xml can specify encoding in tags and npp recognizes and respects this.

Detection, when enabled, is done as a last ditch effort to determine encoding when all else has failed before.Maybe it is time to dive into the code?! :-)

-

@Alan-Kilborn said in Non-unicode encodings discussion:

In @dinkumoil 's screenshot we have the “New Document” settings. It seems like these settings should be when we create a new document with Ctrl+n or File > New. It is not clear that these settings effect loading of an existing file. And a real stunner: I had no idea that Default language setting might affect things.

You are right, these settings are only related to creating new documents, not to opening existing documents. I edited my posting above accordingly.

There’s also Autodetect character encoding on file open on the MISC. tab of the Preferences.

What is being autodetected here?

Encodings with BOM shouldn’t need this, right? I mean, why try to go against what the BOM is telling you?

So are we left with attempting to autodetect between a BOMless UTF-8 and ANSI/Character Set?As I wrote in my last posting, there is no ANSI encoding. What the menu entry

ANSIexactly means depends on the locale of Windows. On my german Windows I can set Czech or Russian locale (that’s the way I made the screenshots of fileCharTable.txtin my last posting) and still have german text in Windows dialog boxes. But the locale affects the interpretation of file content, which has been classified as ANSI by automatic encoding detection.Automatic encoding detection uses heuristics to determine the character encoding actually used when the file was stored to disk by its original author/maintainer. This works by inspecting a block of certain size from the beginning of the file and trying to find patterns that indicate a certain character encoding. And because there are not only Windows-XXXX encodings but also ISO 8859-X and OEM XXX, all different from each other, this is a really hard job and sometimes fails.

-

@Alan-Kilborn said in Non-unicode encodings discussion:

Maybe it is time to dive into the code

To get the whole picture, I think so.

The last time I tried, I got confused very quickly. :-( -

@dinkumoil said in Non-unicode encodings discussion:

for example CharTableGenerator.vbs

For Pythonists that might want to generate the same data, here’s something short to make them feel comfortable:

number_list = list(range(128, 255 + 1)) with open('CharTable.txt', 'wb') as f: f.write(bytearray(number_list))