Notepad++ v8.2.2 Release Candidate

-

Notepad++ v8.2.2 Release Candidate is available for testing:

http://download.notepad-plus-plus.org/repository/8.x/8.2.2.RC/

Notepad++ v8.2.2 new features and bug-fixes:

- Remove 2GB file open restriction for x64 binary

The reason for releasing this new version so soon after v8.2.1, and with only one enhancement, is the large amount of code changed to handle 2GB+ files. Changes that large can cause regressions, hence a release containing only this one improvement.

@xomx has had some crashes with his build (thanks to him for all his help in getting this release out anyway!), but I didn’t have any. Please test the x64 binary with 2GB+ files and let me know if you find any problems.

Thank you and enjoy!

-

@donho,

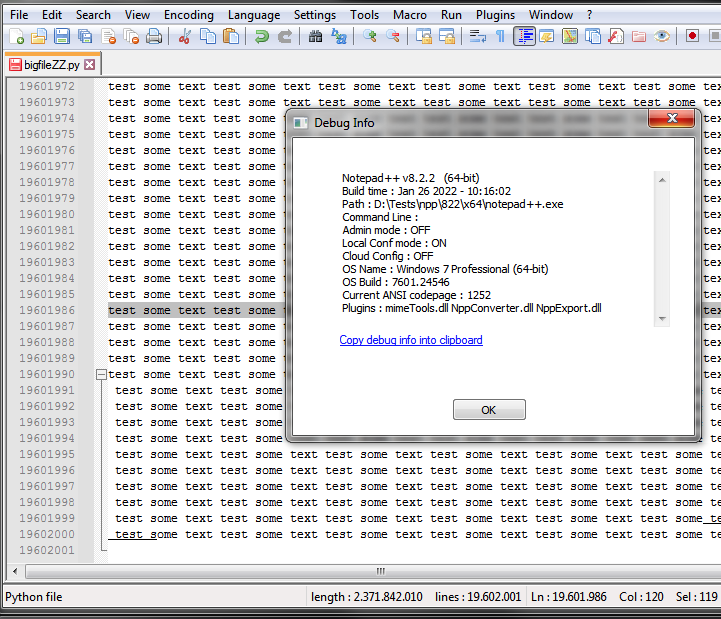

I took a fresh unzip of v8.2.2-64bit portable and created a file with exactly 3e9 characters (codes 32-127, so nothing fancy) called big.bin. I tried to open it by dragging big.bin into the Notepad++ window, by using File > Open, and by dragging big.bin onto the portable notepad++.exe in Windows Explorer. However I do it, Notepad++ warns me that it might take several minutes; I say yes, and about 30-60 seconds later Notepad++ crashes.

I tried renaming the file to big.txt instead, to make sure that no syntax highlighter was trying to fire, and it still crashed. :-( Sorry I don’t have better news for you.
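For anyone who wants to reproduce this kind of test file, here is a minimal Python sketch that writes a file of an exact byte size using only character codes 32-127. The function name write_test_file, its parameters and the chunked writing are illustrative assumptions, not part of Notepad++. With line_length=None it writes one single line with no EOLs, like the big.bin described above; passing a line length inserts an LF after every that-many characters.

```python
from typing import Optional

# Character codes 32-127, as in the test above (127 is DEL, a control character).
TEST_CHARS = bytes(range(32, 128))


def write_test_file(path: str, size: int, line_length: Optional[int] = None,
                    chunk_size: int = 1 << 20) -> None:
    """Write exactly `size` bytes to `path`.

    line_length=None: one single line with no CR/LF at all.
    Otherwise: an LF after every `line_length` characters (the LF counts
    toward `size`).
    """
    if line_length is None:
        pattern = TEST_CHARS
    else:
        reps = line_length // len(TEST_CHARS) + 1
        pattern = (TEST_CHARS * reps)[:line_length] + b"\n"
    # Build a chunk out of whole copies of the pattern, so consecutive
    # chunks continue the pattern without a seam.
    chunk = pattern * max(1, chunk_size // len(pattern))
    written = 0
    with open(path, "wb") as f:
        while written < size:
            part = chunk[: size - written]  # the last chunk may be shorter
            f.write(part)
            written += len(part)
```

For example, write_test_file("big.bin", 3_000_000_000) would produce a no-EOL file of the size used above (about 2.8 GiB of disk space), while adding line_length=100 produces the same size with newlines.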

-

I opened a 2.2 GB txt file that took ~20 seconds to load.

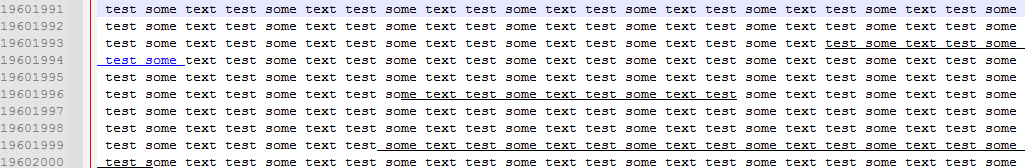

I edited the file at the bottom by adding a space in column mode (see screenshot), which took ~30 seconds, and then scrolled up and down. Suddenly links (?) appeared.

Another thing: with the default settings, it creates a backup.

I renamed the file to .py and there were no noticeable delays from a lexer. Is lexing perhaps disabled when opening such a large file?

No crashes so far.

One thing I noticed is that Npp initially allocated ~5.8GB of RAM, but then settled down to ~2.6GB.

-

@ekopalypse said in Notepad++ v8.2.2 Release Candidate:

One thing I noticed is that Npp initially allocated ~5.8GB of RAM, but then settled down to ~2.6GB.

Hmm, I only have 4GB free of my 8GB total. Memory usage spikes to 100%, drops down to about 60% (so still using some for this file), then Notepad++ crashes. It might be that Windows and Notepad++ together couldn’t come to an agreement about how to allocate memory and page it successfully. So I might just be resource-limited, hence my crashes.

(I also tried turning off auto-backups, but it still crashed.)

Since I don’t need to edit large files for anything I do (home or work), this was more trying to help Don out, rather than see if I can “finally” edit large files with Notepad++.

If large-file editing is working for some users, that’s significantly better than “64-bit Notepad++ is incapable of loading a file >2GB, for any users, by design”.

-

@peterjones said in Notepad++ v8.2.2 Release Candidate:

Since I don’t need to edit large files for anything I do (home or work), this was more trying to help Don out, rather than see if I can “finally” edit large files with Notepad++.

Me too :-). Sorry, I should have addressed Don directly to make that clear.

I also don’t expect ever having to use files that large, and yes, the crashes could well be caused by memory running low. I assume that is the most likely cause.

-

I should add:

- before testing, switch off: word wrapping, session snapshots and periodic backups, and backup on save

- if you want to test writing to a large file, also switch off the auto-completion feature (@Ekopalypse)

There is currently a 200MB file-size limit for syntax styling, so do not expect colorized Scintilla output for, e.g., a 201MB *.cpp file. Expected memory consumption:

- up to 200MB, you can expect 2x the file size + some extra memory

- above 200MB, it should be only 1x the file size + some extra memory

A crash is expected when your big file does not contain any EOLs (CR/LF); this is a Scintilla limitation. So, e.g., a 2GB+ file consisting only of NUL bytes crashes.
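Since a big file with no EOLs is expected to crash, it can be worth checking a file before opening it. A minimal Python sketch, assuming a byte-level check is enough (CR, LF and CRLF all count as line ends); longest_line and has_no_eol are hypothetical helpers, not Notepad++ features. The file is streamed in chunks, so it does not need to fit in memory, and the longest line equals the file size exactly when the file contains no CR/LF at all.

```python
import os


def longest_line(path: str, chunk_size: int = 1 << 20) -> int:
    """Length in bytes of the longest line; CR, LF and CRLF all end a line."""
    longest = 0
    current = 0  # length of the line still open at the end of the last chunk
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            # Map CR to LF, so CRLF just yields an extra empty "line".
            pieces = chunk.replace(b"\r", b"\n").split(b"\n")
            # The first piece continues the line carried over from before.
            current += len(pieces[0])
            for piece in pieces[1:]:
                longest = max(longest, current)
                current = len(piece)
    return max(longest, current)


def has_no_eol(path: str) -> bool:
    """True when the file contains no CR/LF at all (the crash case above)."""
    return longest_line(path) == os.path.getsize(path)
```

Besides flagging the no-EOL case, the longest-line figure shows how far a file is from having "normal" lines, which may be useful when preparing test files.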

Memory spikes are ok.

Do not try to open a big file on a system where the available physical memory is lower than approx. 2GB + the file size. This can easily lead to an unresponsive system because of the OS virtual memory mechanism.
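The memory rules of thumb above can be turned into a quick pre-check. A minimal Python sketch: the "some extra" memory is left out because no figure is given for it, and reading 200MB and 2GB as binary units (MiB/GiB) is an assumption. The function names are illustrative only.

```python
MB = 1024 ** 2  # assumption: binary megabytes
GB = 1024 ** 3  # assumption: binary gigabytes
STYLING_LIMIT = 200 * MB  # no syntax styling above this file size


def estimated_editor_memory(file_size: int) -> int:
    """File-dependent memory estimate, excluding the unspecified extra."""
    factor = 2 if file_size <= STYLING_LIMIT else 1  # styled files: ~2x
    return factor * file_size


def enough_physical_memory(file_size: int, available: int) -> bool:
    """Rule of thumb: available physical RAM >= ~2GB + file size."""
    return available >= 2 * GB + file_size
```

With the numbers from earlier in this thread (a 3e9-byte file and about 4GB free), enough_physical_memory(3_000_000_000, 4 * GB) returns False, which is consistent with the crashes reported there.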

-

@xomx said in Notepad++ v8.2.2 Release Candidate:

when your big file does not contain any EOLs (CR/LF) - Scintilla limitation

That would explain it. When I added newlines, I could open a >2GB file.

-

I’ve not tried this, but I have a couple of observations.

- If lack of memory is the problem, then you should be hearing from Windows to that effect as it tries to help, usually by closing something you’d rather leave open.

- This huge-file editing ability seems to come with several significant caveats, which makes me think it should have to be enabled explicitly, with the user acknowledging the caveats when doing so (otherwise you’ll just get multiple reports about them).

-

Thank you guys for your tests and suggestions.

Following your suggestions, here is RC2 with some enhancements:

https://community.notepad-plus-plus.org/topic/22446/notepad-v8-2-2-release-candidate-2
