Exporting pages from Hits?

-

@rjm5959 said in Exporting pages from Hits?:

Also, unfortunately, I need to turn this over to non tech people on a team that will use this every month end on these massive files so I need something fairly simple that they can use. From meetings with them, they were only familiar with Notepad and I had them install Notepad++ which is far better for this type of searching and extracting…

Then — as Peter Jones already said in a different reply — Notepad++ is the wrong tool.

I had assumed this was a one-off, “personal” task. If this is something done by multiple people every month as part of an organization, there is no way around this:

You need to enlist a programmer to automate this task for you. This is not a job for end-users to do with off-the-shelf tools.

The good news is, it’s an easy — almost trivial — programming task. You won’t need a team, or a highly-experienced senior programmer; any competent coder should be able to do this, possibly in a single afternoon. Just be sure you’re clear on what you need and what you want (best to write it down as a requirements document), and that you have one or more real source files available for the programmer to use, before you start.

Then it will take moments of your people’s time, instead of hours, every month. Also, consider this: If you and your organization are willing to spend that much of people’s time every month to do this, it must be important. Doing this manually, repeatedly, will be error-prone. A custom-coded program will let the computer do the repetitive, time-consuming and error-prone part.

-

@Coises said in Exporting pages from Hits?:

Also, consider this: If you and your organization are willing to spend that much of people’s time every month to do this, it must be important.

Moreover, since it was taking at least 8 hours per month, total, then paying a contractor for up to 1 week of time to write the program and make sure it’s working for you would be a net win after a year or less.

-

Thanks guys. I will look into having someone create something for this. They weren’t very receptive when I brought this up previously and that’s why I had the vendor split out these reports separately to download and use. A big part of the issue with slow extraction from Synergy is that Synergy was moved from On Prem to the Azure cloud so that increased the time it takes to extract substantially. I was able to make some changes within Synergy to make the search much faster, but the extraction is still very slow. Not much improvement there. I have a case open for that also. Probably not much that can be done for that either. I was hoping there was a way to get around the limit…

-

Hello @rjm5959, @alan-kilborn, @peterjones, @coises and All,

@coises, you said :

It’s possible that I or someone else (@guy038, where are you?) will think of a way to write the regular expression …

Well, I’m not very far from my laptops ! But these last days, I was searching for an [ on-line ] tool which could extract the color triplets ( RxGxBx ) of all pixels of a JPG image, without success :-(( The best I could find was an interesting on-line tool :

https://onlinejpgtools.com/find-dominant-jpg-colors

which gives a fair estimation of the main colors of any .jpg image uploaded. However, although you can choose the number of individual colors in the palette, it cannot list all the pixels, of course !

Thus, I’ll probably e-mail @alan-kilborn about a Python solution !

Let’s go back to @rjm5959’s goals ! However, from the last posts, I suppose that you gave up the N++ way !

Personally, I think that the @coises’s regex, in order to delete any page which does not contain any key-word, is quite clever and should work, even with very huge files as it only grabs a page amount of text, at a time !

So, @rjm5959, let’s follow this road map :

- Make a copy of your original file

- Open this copy in Notepad++

- First, verify whether the first page ( before the first `FF` char ) contains one of the expressions ( `United Bank` or `Nationstar` )

- If it does, add a `~` character right before the expression

- Secondly, do the same manipulation for the last page of your file ( after the last `FF` character )

- Thirdly, open the Replace dialog ( `Ctrl + H` )

- SEARCH `(?-i)United Bank|Nationstar`

- REPLACE `~$0`

- Check the `Wrap around` option

- Select the `Regular expression` option

- Click on the `Replace All` button

=> It should be done quickly and it adds a `~` character right before any keyword ( `United Bank`, `Nationstar`, … ) listed in the regex, where the keywords are separated with the alternation symbol `|`

Note that I suppose that the search is sensitive to the case. Else, simply use the regex `(?i)United Bank|Nationstar` for a search whatever the case

Now, we’ll execute this second S/R :

- SEARCH `\x0C[^~\x0C]+(?=\x0C)`

- REPLACE `Leave EMPTY`

- Keep the same options

- Click on the `Replace All` button

=> Depending on the size of your copy, it may take a long time before achieving this part ! Be patient ! At the end, you should be left with only :

- All the pages containing at least one `United Bank` expression

- All the pages containing at least one `Nationstar` expression

BTW, @coises, I don’t think that the atomic structure is necessary, because, anyway, the part `[^~\x0C]+(?=\x0C)` concerns a non-null list of characters all different from `\x0C` and which must be followed with a `\x0C` char. Thus, the regex engine will always get all chars till a next `\x0C` char. It will never backtrack because it always must be followed with a `\x0C` char !

Finally, run this trivial search/replace :

- SEARCH `~`

- REPLACE `Leave EMPTY`

If the second search/Replace `\x0C[^~\x0C]+(?=\x0C)` is too complex, you could try to slice this copy in several files and retry the method on each part !

I, personally, created a file, from the `License.txt` file, with a `FF` char every 60 lines, giving a total of 4,335 pages, of which 1,157 contained the `~` character. Before using the `\x0C[^~\x0C]+(?=\x0C)` regex, the file had a size of about 48 MB. After replacement, 15 seconds later, only the pages containing this `~` char remained, so a file of about 69,420 lines for a total size of 13 MB :-)

Best Regards,

guy038
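For anyone following the advice earlier in the thread to script this instead, here is a minimal Python sketch of the same page filter. It assumes pages are separated by form-feed characters and that the file fits in memory; the function names `keep_matching_pages` and `filter_file` are made up for illustration. Unlike the search/replace above, it also drops a first or last page that has no keyword.

```python
import re

FORM_FEED = "\x0c"
# Keywords from the thread; case-sensitive, like the (?-i) regex above.
KEYWORDS = re.compile(r"United Bank|Nationstar")


def keep_matching_pages(text, pattern=KEYWORDS):
    """Return only the form-feed-delimited pages that contain a keyword."""
    pages = text.split(FORM_FEED)
    kept = [page for page in pages if pattern.search(page)]
    return FORM_FEED.join(kept)


def filter_file(src_path, dst_path, encoding="utf-8"):
    """Read src_path, keep the matching pages, write them to dst_path.

    The encoding is an assumption; adjust it to the real report files.
    """
    with open(src_path, encoding=encoding, newline="") as src:
        text = src.read()
    with open(dst_path, "w", encoding=encoding, newline="") as dst:
        dst.write(keep_matching_pages(text))
```

No `~` marker is needed here, because each page is tested on its own rather than matched by one large regex.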

-

-

Hi, @rjm5959, @alan-kilborn, @peterjones, @coises and All,

I must apologize to @coises ! My reasoning about the necessity or not to use an atomic group was completely erroneous :-(( Indeed, I would have been right if the regex had been :

`\x0C[^\x0C]+(?=\x0C)`

But the @coises regex is slightly different :

`\x0C[^~\x0C]+(?=\x0C)`

And because the `~` character belongs to the negative character class `[^.......]` too, the fact of using an atomic group or not, for the pages containing the `~` character, is quite significant ! Indeed :

- The normal regex `\x0C[^~\x0C]+(?=\x0C)` would force the regex engine, as soon as a `~` is found, to backtrack, one char at a time, up to the first character of a page, after `\x0C`, in all the lines which contain the `~` character. Then, as the next character is obviously not `\x0C`, the regex would skip and search for a next `\x0C` char, further on, followed with some standard characters

- Due to the atomic structure, the enhanced regex `\x0C[^~\x0C]++(?=\x0C)` would fail right after getting the `~` character and would immediately force the regex engine to give up the current search and search, further on, for another `\x0C` character, followed with some standard chars !

Do note that, if the `~` character is near the beginning of each page `\x0C`, you cannot notice any difference !

I did verify that using an atomic group reduces the execution time, for huge files ! With a 30 MB file, containing 159,000 lines, of which 1,325 contain the `~` char, located about 4,780 chars after the beginning of each page, the difference in execution was already about 1.5 s !!

As a conclusion, @rjm5959, the initial @coises’s regex `\x0C[^~\x0C]++(?=\x0C)` is the regex to use with files of important size ;-))

BR

guy038
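The two forms discussed above can be compared outside Notepad++ with a small Python sketch. It assumes Python's `re` module, which accepts the possessive `++` only from version 3.11 on, so the helper falls back to the plain form on older versions. The helper names and the sample text are invented for illustration. Both forms remove the same pages; they differ only in how much backtracking happens on pages that contain `~`.

```python
import re

PLAIN = r"\x0C[^~\x0C]+(?=\x0C)"
POSSESSIVE = r"\x0C[^~\x0C]++(?=\x0C)"


def compile_page_regex():
    """Prefer the possessive form; fall back where `++` is unsupported."""
    try:
        return re.compile(POSSESSIVE)  # accepted by Python 3.11 and later
    except re.error:
        return re.compile(PLAIN)  # same matches, may backtrack more


def drop_unmarked_pages(text):
    """Delete every page between two form feeds that has no `~` marker."""
    return compile_page_regex().sub("", text)


# Five pages separated by form feeds; only the third carries a marker.
sample = "first\x0Cno mark\x0Chas ~ mark\x0Calso none\x0Clast"
result = drop_unmarked_pages(sample)
```

As in the Notepad++ procedure, the first and last pages are never matched, because the pattern needs a form feed on both sides of a page.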

-

Thanks Guy038. I will give this a try. The file I have is 7.7 million lines and the page hits will be 89,984 for nationstar. Each page is 57 lines so that’s about 5.1 million lines. Will this work with that much data?

-

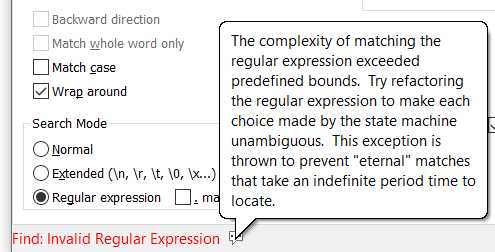

Couldn’t get this to work, but it’s far too complicated anyway for non tech people to run. We are now receiving the data without the FF. Now the header page has 1 like below followed by S3001-54D. I have the below that I’m using to search for the header and all the details within that page and works when I start at the first hit and click on ‘Find Next’, but as I’ve said, there’s over 80,000 hits. When I search the whole file for all hits to Find or Mark, I get the “Find: Invalid Regular Expression” message. Is there any way to get this to loop and get all the hits and export? Maybe creating a Macro? I need something simple that non tech people can run every month end.

(?s)^1((?!^1).)*?nationstar.*?^1

1S3001-54D CENTRAL LOAN ADMINISTRATION 04/01/24

LETTER LOG HISTORY FILE FOR NATIONSTAR MORTGAGE LLC1 PAGE 7549
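A line-by-line script avoids one huge regex over the whole file. The following is a minimal Python sketch, assuming every page starts with a line whose first character is `1` (as in the header above) and that no detail line starts with `1`. The names `iter_pages` and `export_hits` and the demo text are hypothetical. Only one page is held in memory at a time, so the size of the file should not matter much.

```python
import io
import re


def iter_pages(lines, is_header=lambda line: line.startswith("1")):
    """Yield pages as lists of lines; each header line starts a new page."""
    page = []
    for line in lines:
        if is_header(line) and page:
            yield page
            page = []
        page.append(line)
    if page:
        yield page


def export_hits(src, dst, keyword="nationstar"):
    """Copy every page of src that contains keyword (any case) to dst.

    Returns the number of pages written.
    """
    needle = re.compile(re.escape(keyword), re.IGNORECASE)
    kept = 0
    for page in iter_pages(src):
        if any(needle.search(line) for line in page):
            dst.writelines(page)
            kept += 1
    return kept


# Small in-memory demo; real use would pass open file objects instead.
demo_src = io.StringIO(
    "1S3001-54D HEADER\n FOR NATIONSTAR MORTGAGE\n detail\n"
    "1S3001-54D HEADER\n FOR OTHER BANK\n detail\n"
)
demo_dst = io.StringIO()
demo_count = export_hits(demo_src, demo_dst)
```

With real files, `src` and `dst` would be the objects returned by `open(...)` for the monthly report and the output file. If detail lines can also begin with `1`, a stricter `is_header` test would be needed.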

-

@rjm5959 The average car can be driven at 100 MPH (160 km/h). Is that a good idea? Likewise, there are things you may be able to do in a regular expression under ideal circumstances that are a bad idea when the road gets twisty with obstacles.

Your project seems much more suited for something coded in a scripting or compiled language. This makes it easy to break the project down into small easy to understand and debug pieces. When there is a change in the requirements, or your understanding of the problem, it’s usually quite easy to make tweaks to a scripted/compiled solution.

It’s possible this Synergy thing you mentioned also supports scripting.

-

@mkupper This is easily done in Synergy and the hits and pages are found quickly. The issue is when exporting. When exporting these 89,000 hits it’s about 5.5 million lines and takes many many hours to export. During regular business hours it’s excruciating. It can run all day and night to pull this much data. The issue is compounded because we moved our Synergy servers and data to the cloud from on prem. When everything was on prem, the extract ran faster, but it was still slow. Not nearly as bad as now.

-

@rjm5959 said in Exporting pages from Hits?:

@mkupper This is easily done in Synergy and the hits and pages are found quickly. The issue is when exporting. When exporting these 89,000 hits it’s about 5.5 million lines and takes many many hours to export. During regular business hours it’s excruciating. It can run all day and night to pull this much data. The issue is compounded because we moved our Synergy servers and data to the cloud from on prem. When everything was on prem, the extract ran faster, but it was still slow. Not nearly as bad as now.

See if Synergy offers server-side scripting. If so, you can filter or reformat the data prior to exporting or downloading it. If there is still a desire or need to have many thousands or millions of lines in the export, then look into parsing, filtering, and/or reformatting the data using PythonScript. That will allow you to mix-n-match the benefits of a procedural language and regular expressions.

-

@rjm5959 said in Exporting pages from Hits?:

1S####-##C CENTRAL LOAN ADMINISTRATION YY/MM/DD

LETTER LOG HISTORY FILE FOR NATIONSTAR MORTGAGE LLC1 PAGE ####

I know nothing about the context in which you’re using this data, but are you sure that you’re not leaking confidential data to the internet by posting this here? Because if you are, consider anonymizing it.