Chinese Characters saved with Notepad+ turn Unicode in another editor

-

I can’t figure out what setting I’m missing. I realize that character encoding is really hard-stuff.

I’m using Visual-Studio 2008. And VS can read and save Asian characters. But when I edit files with Notepad++ the 2-byte Asian Chinese characters are readable and savable within Notepad++ but then they are corrupted for other editors. I say corrupted. Because then I reopen the same files after they are saved with NP+ they they come out ‘Unicode’ and/or gobbledygook in VS. I think VS-08 uses UTF, but I’m not an expert. I’m speaking specifically of comment lines in VS. I don’t know what it would do to web-text if I had any with 2-byte characters. In the future we will have Chinese web-text.I’ve tried many different encoding settings, but nothing seems to work. If I use NP+ to save it has the same problem.

-

Correction:

Excuseme: They are not ‘unicode’. They are something other-worldly. This: ‘获得是第几个’ gets turned into this ‘»ñµÃÊǵڼ¸¸ö’ -

And if you edit Chinese in Visual-Studio, what happens?

-

Do you know what encoding VS-08 uses when it saves the file successfully with the correct Asian Chinese characters? There should be a setting someplace that defines such things.

Whatever encoding that is, you will need to set the same in Notepad++. (You might also have to turn off Settings > Preferences > MISC > Autodetect character encoding, because there are known issues with that in recent NPP versions.)

-

@PeterJones said:

@Robert-Koernke ,

(You might also have to turn off Settings > Preferences > MISC > Autodetect character encoding, because there are known issues with >that in recent NPP versions.)

I think that was it. I’ve tested saving and opening after turning that off, and it works.

To answer your question. I’m 98% sure it is UTF-8-BOM. -

Sorry it looked like the last entire post was from @PeterJones . I’m learning how to quote and stuff on this site.

-

If it’s UTF-8-BOM in Visual Studio, then I see no reason why Notepad++ would be messing it up. If there’s a BOM, NPP will know it’s UTF-8, and it will save it again in UTF-8-BOM.

Pasting your text, set Encoding > Convert To UTF-8-BOM, and saving with Notepad++.

获得是第几个Then use an external hex dumper to show the 21 bytes in the file:

00000000: efbb bfe8 8eb7 e5be 97e6 98af e7ac ace5 ................ 00000010: 87a0 e4b8 aa .....Looking up the UTF-8 representation of each

BOM = EF BB BF https://en.wikipedia.org/wiki/Byte_order_mark#Byte_order_marks_by_encoding 获 = E8 8E B7 http://www.fileformat.info/info/unicode/char/83b7/index.htm 得 = E5 BE 97 http://www.fileformat.info/info/unicode/char/5f97/index.htm 是 = e6 98 af http://www.fileformat.info/info/unicode/char/662f/index.htm 第 = e7 ac ac http://www.fileformat.info/info/unicode/char/7b2c/index.htm 几 = e5 87 a0 http://www.fileformat.info/info/unicode/char/51e0/index.htm 个 = E4 B8 AA http://www.fileformat.info/info/unicode/char/4e2a/index.htmSo all of the UTF-8 representation are exactly translated into the hexdump of the file in Notepad++.

This is the correct 21 bytes for a UTF-8-BOM file with those six codepointsI can open and close, add a space, delete it, resave – do that as many times as I want, and it doesn’t change the file.

I opened that file in MSWord: it asked me to convert file from “Encoded Text”,

Other Encoding= “Unicode (UTF-8)”, and the preview and the final result in Word was the same six glyphs.

Open it with WordPad: it shows those same six glyphs.If you open that exact file in VS, and it shows anything but that, then VS isn’t expecting and/or cannot handle UTF-8-BOM.

So, try pasting your text into a fresh file in Notepad++, Encoding > Convert to UTF-8-BOM, save. Then try opening the file in VS. It should be right.

Also, try pasting those 6 glyphs into VS, and saving, then use a hex dumper[1] to dump the saved VS file, and show us the results

----

[1]: If you don’t have a hex dumper, but since you do have VS available, I assume you could compile this C code:#include <stdio.h> int main(int argc, char**argv) { int c, i; FILE* fp; if(argc<2) { printf("usage: %s <filename>", argv[0]); return(0); } if(NULL==(fp = fopen(argv[1], "rb") ) ) { perror("could not open file"); return(1); } while( EOF != (c = fgetc(fp)) ) { printf("%02x ", c); if(++i % 16 == 0) printf("\n"); } return(0); } -

i’m glad that disabling autodetect character encoding worked for your case, thanks for reporting back 👍

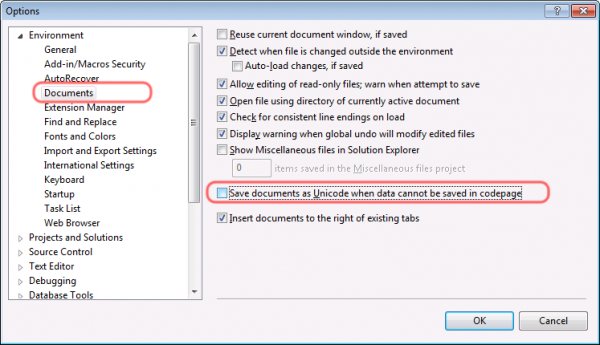

i think, vs2008 uses the default codepage of the current localization, unless “save as unicode …” is selected at the documents options.

if it is selected, it will add a bom, but only to files that don’t match the current windows language codepage as i can recall.

If it’s UTF-8-BOM in Visual Studio, then I see no reason why Notepad++ would be messing it up. If there’s a BOM, NPP will know it’s UTF-8, and it will save it again in UTF-8-BOM.

it should, but i also had the problem once, that utf-8-bom was not correctly loaded if auto detect encoding was enabled. easy to spot, as the encoding bullet was somewhere nested inside the character sets menu instead of having the bullet at utf-8-bom.

maybe we should verify some tests with bom, to check if it’s the same result if autodetect character encoding is activated.

(i guess most regulars have currently disabled autodetect, until the uchardet 0.0.6 implementation is fixed, so we’d need to reenable it for some testing)