Search in folder (encoding)

-

I am sorry to be the bearer of bad news: The file you are showing in that video does not appear to be a text file.

With all those black-boxed characters, it appears to be a binary file that happens to have some of its information look like text. Notepad++ cannot be expected to handle any arbitrary binary data that you throw at it, whether or not that binary data happens to contain some number of text strings. The non-text bytes interfere with its ability to do the job Notepad++ was designed to do: edit text. In some situations, you might be able to abuse the application to edit the text included in the binary file, but you have pushed beyond that.

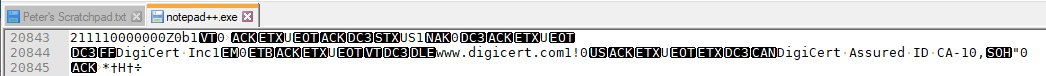

Just because something can be loaded in Notepad++, and even just because you can read some of the text from that file in Notepad++, does not mean that the underlying file is actually a plaintext file. For example, when I look at

notepad++.exein Notepad++, I can find areas that look quite similar to what is shown in your video:

– it’s got plain text, that I can absolutely read, and be confident that it was intended to be text… but that’s really an excerpt of bytes from an executable file, not from a text file.

– it’s got plain text, that I can absolutely read, and be confident that it was intended to be text… but that’s really an excerpt of bytes from an executable file, not from a text file.Expecting a meaningful search or search-and-replace result when using a text editor to edit non-text files is a rather unfair expectation, in my opinion.

see also this faq

You said “I could not, again reproduce the issue” just because you don’t meet the second condition.

Guy was showing results from the files you provided! Don’t complain to him for not meeting your condition when you supplied the file.

Getting back to my main point: while the video you showed does not appear to be a plain text file, the screenshots Guy showed of your example files does appear to be text. (I don’t download arbitrary zip files from users I don’t already trust, so I cannot verify myself that they are nothing more than plain text). But, if they really are text like it appears, then it is more reasonable to expect Notepad++ to be able to handle them.

In that case, if you wanted to share files that show the problem you are encountering, then share those, and maybe Guy or some other brave soul will download an arbitrary .zip and try to replicate your problem. If you believe that one or both of the files from that already-shared zip do show the problem, then Guy’s assessment disagrees with you, and you’ll have to explain again exactly how to replicate the problem with the files you shared.

-

@PeterJones, got it. If my files are not text files, so N++ cannot understand them properly and then there is no issue at all. Thank you, my apologies for being rude. But also I want to mention that I really provided problem file - “jackie_default_01.json” from “json.zip” archive. It’s equal to file in video. So your words “if you wanted to share files that show the problem you are encountering, then share those” sounds strange. Guy’s assessment disagrees with me because Guy ignores “jackie_default_01.json” file for some reason.

-

Ah, if you think only one file is not enough, here 5 more files for any brave soul.

-

Hi, @mayson-kword @peterjones, @alan-kilborn and All,

I did some tests and I draw these conclusions :

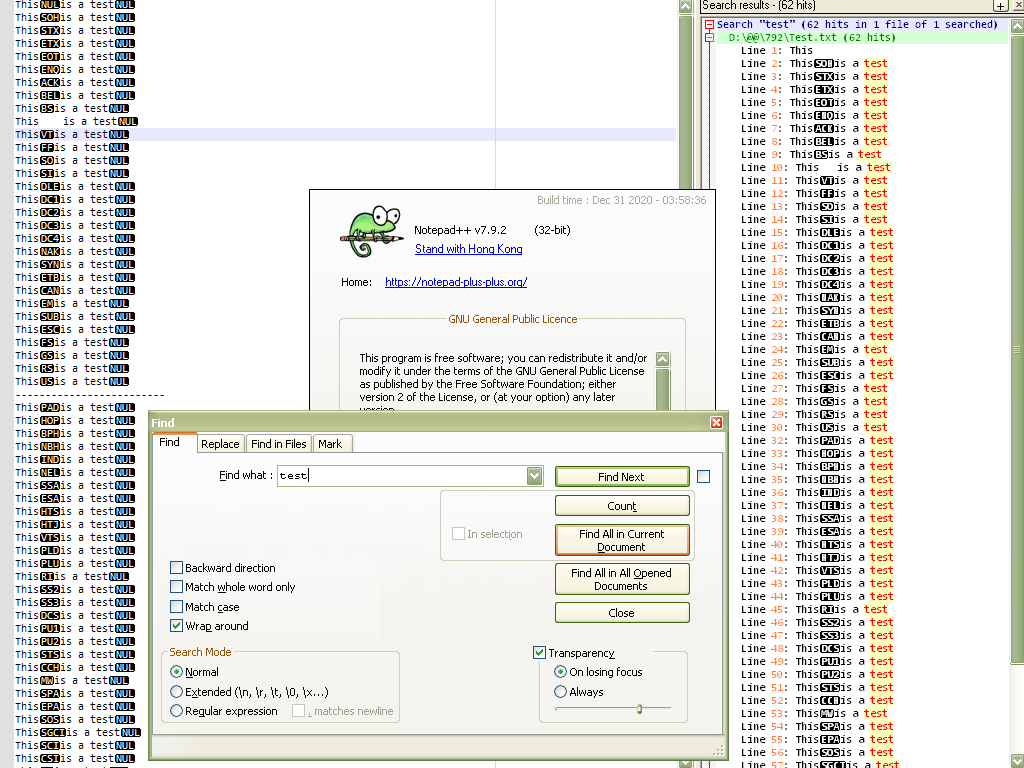

For a non pure text file, which can contain many control codes, in reverse video, including the

NULcharacter :-

Any line ends with either the first Windows

CRLF, the UnixLFor the MACCRcontrol code(s) -

In the

Findresult panel, anycontrol C1orControl C2character, except for theNUL, theLFand theCRcharacters are simply displayed as standard characters -

In the

Findresult panel, any line containing the search string is displayed till the firstNULchar met -

Thus, if any line, as defined above, just begins with a

NULcharacter, nothing is displayed, although it did find the search string for that line !

Demonstration :

I also verified that this behaviour occurs with

ANSIor anyUNICODEencoding and does not depend on the type ofEOLcharacters, too !So, @mayson-kword, unless you decide to work on a copy of your non-text files, in order to delete all the

\x00characters, it’s seems impossible to correctly get all the lines in theFind resultwindow :-((Best regards

guy038

-

-

@guy038, thank you a lot. There is no way to improve autodetect encoding feature, but you’ve done as much as possible, including advice to use BOM, that solves my issue very well.

Have a nice day, all of you are great.

-

Notepad++ assumes that a file has encoding, meaning, the entire content of the file is text (Unicode symbols) using a single encoding. Notepad++ does not try to support files where every paragraph has different encoding or files that are essentially binary with pieces of “text” at some encoding embedded here and there.

Having said that there are 2 major ways that Notepad++ could improve upon users experience in that regard that neither should be difficult to implement:

- If a specific encoding is not autodetected on opening a file Notepad++ will default to ansi encoding (that should be called ascii encoding). That was reasonable 20 years ago. It is unreasonable today. Utf-8 should be the default and since it is also backward compatible to ascii it should not hurt users.

- Notepad++ really needs the feature in the settings of “assume all files are of encoding XXX” where XXX is selected from a combo box. My guess is that a vast majority of Notepad++ users have all their relevant files in a single encoding and they don’t need for Notepad++ to autodetect it (guess) it if they can just tell it once.

-

No, afaik utf8 cannot replace ANSI code pages easily.

For example the bytec4isÄin cp1252 andДin cp1251

and invalid in utf8.But I agree, npp should have the possibility to let the user

force an encoding and it is, probably, a good idea to use utf8

as the default. -

Hello, @ekopalypse, and All,

You said :

For example the byte

c4isÄin cp1252 andДin cp1251

and invalid in utf8Eko, I not agree with that statement : a

C4byte can be found in anUTF-8file as it is the first byte of a2-Bytescoding sequence of the characters fromĀ(U+0100, coded asC4 80) tillĿ(U+013F, coded asC4 BF)Refer to the link and the table below :

https://en.wikipedia.org/wiki/UTF-8#Codepage_layout

•-------•-------•--------------------------------------------------------• | Start | End | Description | •-------•-------•--------------------------------------------------------• | 00 | 7F | UNIQUE byte of a 1-byte sequence ( ASCII character ) | | 80 | BF | CONTINUATION byte of a sequence ( from 1ST to 3RD ) | | C0 | C1 | FORBIDDEN values | | C2 | DF | FIRST byte of a 2-bytes sequence | | E0 | EF | FIRST byte of a 3-bytes sequence | | F0 | F4 | FIRST byte of a 4-bytes sequence | | F5 | FF | FORBIDDEN values | •-------•-------•--------------------------------------------------------•

I think that your reasoning is correct if we take, for instance, the individual

C1byte, which is :-

The

Ácharacter, in aWindows-1250/1252/1254/1258encoded file -

The

Бcharacter, in aWindows-1251encoded file -

The

Αcharacter, in aWindows-1253encoded file -

The

ֱcharacter, in aWindows-1255encoded file -

The

ءcharacter, in aWindows-1256encoded file -

The

Įcharacter, in aWindows-1257encoded file

…

- Always forbidden in an

UTF-8orUTF-8-BOMencoded file

Best Regards,

guy038

-

-

-

Hi Guy, how are doing? I hope you are doing well.

I replied that ANSI/ASCII can be replaced by utf-8.

ASCII can, but ANSI cannot.

My example was to show why it can’t be replaced.

Yes, C4 is valid as long as it is followed by another byte that forms a valid utf8 character.

Alone it is invalid. -

@Ekopalypse said in Search in folder (encoding):

No, afaik utf8 cannot replace ANSI code pages easily.

The terminology is confusing in general and Notepad++ is not helping.

There are modern encodings which can represent ANY Unicode symbol with various multibyte schemes.

There are legacy ascii encoding that can represent up to 256 symbols.

Every ascii encoding comes with a code page that defines different symbols for the range 128-255.

The symbols for 0-127 in ascii encoding (and utf8) are always the same. Let’s call them “plain English”.Ascii encodings should die. Notepad++ must open them but should discourage people from creating new ones by forcing an explicit choice to do so.

People that choose one of the modern encodings save themselves trouble later.

And for the many many people who can’t understand the concept of encoding Notepad++ should help by choosing the right default.Notepad++ default “ANSI encoding” is ascii encoding with some arbitrary code page.

Generally using ascii encoding without defining an explicit code page is equivalent to saying “I only care about plain English and don’t give a fuck about range 128-255”.Other “code pages” or “Character Sets” are not relevant to Notepad++ default. Users who want them need to either select them manually or let the autodetect guess it. Does it even work? How accurate is guessing of a code page?

For people who are ok with “ANSI”, the majority belong to the "don’t give a fuck about 128-255 and they will be OK with utf8.

A minority that actually use “ANSI” and adds to the document symbols from the default code page will need to select it explicitly or hope that autodetect works. But they better off switch to a modern encoding anyway.

Even if the solution will not be 100% backward compatible it will benefit much more people than it would hurt. -

@gstavi said in Search in folder (encoding):

I agree with most of what you said, but I think there is a misunderstanding here about ANSI. (maybe it’s me)

It’s true, ANSI is used as a type of encoding, which it is not.

Instead, it is just an abbreviation for the codepage that was used to set up the operating system.

For one person it’s cp1252, for another it’s cp1251, and for the next it’s something else, and so on.

But GetACP returns this “setup” encoding and that is,

I assume, the one that is/was used by Windows users and is used by npp.

I think that makes sense.

Nevertheless, I think using unicode and especially utf8 makes more sense these days. -

@Ekopalypse said in Search in folder (encoding):

I think that makes sense.

It is a legitimate decision. And it makes sense … and in my (very personal) opinion it is awful.

Its bad for interoperability because transferring a file between 2 computers could end up badly.But my personal dislike is because I work on multilingual operating system where the other language is right-to-left Hebrew.

And it is unimaginably annoying when some application decides to do me a favor and adjust itself without asking.

I never want to see Hebrew on my computer unless I explicitly asked for it. The OS is obviously setup with English as primary language but FUCKING OneNote still decides to suddenly arrange pages right-to-left because the time zone is for Israel. And it feels random, unfixable and takes the control from me.Since users don’t explicitly choose codepage when they setup their system, using GetACP is just a guess. And if it misfires, users will not understand why because they are unaware that a choice was made for them. Don’t guess on behalf of the user if it can be avoided.

Side story: as can be expected I am sometimes the “tech person” for friends and family. I strongly refuse to service installations that are fully Hebrew. If you will ever open regedit on a Hebrew Windows and see all the English content aligned right-to-left you would lose all respect to Microsoft.

-

@gstavi said in Search in folder (encoding):

If you will ever open regedit on a Hebrew Windows and see all the English content aligned right-to-left you would lose all respect to Microsoft.

Maybe I should give it a try to finally be persuaded to switch to Linux :-D