Marked text manipulation

-

Suncatcher,

From what you said, I used the FOUR following rules :

-

If a line does NOT contain a string of THREE digits, followed by a DOT, this line is completely deleted

-

Any range of characters, ENDING a line, which does NOT contain a string of THREE digits, followed by a DOT, is deleted, too

-

Any string of THREE digits, followed by a DOT, is UNCHANGED

-

Any other single character is REPLACED by a single SPACE character

This leads to the following S/R, with the Regular expression search mode CHECKED :

SEARCH

(?-s)^\R|(?!.*\d{3}\.).+|(\d{3}\.)|(.)REPLACE

(?1\1)(?2 )For instance, from this example text, below :

134.170.110.48 85.33.98.0 - 85.33.99.255 185.33.220.38 200.25.6.78 65.55.52.23 5.155.52.23 12.3.8.145 185.33.220.0 - 185.33.223.255 1.23.137.2 1.2.3.4 25.155.52.153 67.42.95.0 - 67.42.95.99 31.53.61.99 - 31.53.61.100 58.33.99.0 - 58.33.101.1We would get the replaced text, below :

134.170.110. 185. 220. 200. 155. 185. 220. 185. 223. 137. 155. 101.

As you may notice, as expected, some lines have been deleted :

-

The lines without any three consecutive digits, at all

-

The lines with, ONLY, one block of three digits, at the END of a line, without the final DOT character

REMARK :

If you prefer to keep a blank line, in case NO block of three consecutive digits exists, in a line, just change the search regex to :

SEARCH

(?-s)(?!.*\d{3}\.).+|(\d{3}\.)|(.)This time, you would obtain :

134.170.110. 185. 220. 200. 155. 185. 220. 185. 223. 137. 155. 101.Best Regards,

guy038

-

-

@guy038 well, RegExp approach is not bad but seems to be disposable. I tried to adapt it to another pattern and that’s what I’ve got.

I set the pattern which consists of dot and two digits.

And rewrote your expressions accordingly -(?-s)(?!.*\.\d{2}\.).+|(\.\d{2}\.)|(.)

And that’s what it output to me

And that is obviously not the thing supposed to be there. To achieve our aim regexp should be rewritten every time. Pythonscript seems to be more universal and consistent approach.

-

Hi, Suncatcher and Scott,

Ah… Of course, my previous regex was much to closed to, your specific regex

\d{3}\.. In addition, I tried, hard, from what you said, to keep the exact position where the different matches were !So, I decided to run the Scott script to see how this script re-writes the different matches :-) BTW, Scott, very nice script for people, who does not worry about regex problems or details ;-))

So, starting from my original example text, below :

134.170.110.48 85.33.98.0 - 85.33.99.255 185.33.220.38 200.25.6.78 65.55.52.23 5.155.52.23 12.3.8.145 185.33.220.0 - 185.33.223.255 1.23.137.2 1.2.3.4 25.155.52.153 67.42.95.0 - 67.42.95.99 31.53.61.99 - 31.53.61.100 58.33.99.0 - 58.33.101.1The Scott’s script, put the following text, in the clipboard, in that form :

.17 .11 .48 .33.98 .33.99.25 .33.22 .38 .25 .78 .55.52.23 .15 .52.23 .14 .33.22 .33.22 .25 .23.13 .15 .52.15 .42.95 .42.95.99 .53.61.99 .53.61.10 .33.99 .33.10From this modified text, we are able to deduce that the script follows TWO main rules :

-

If two or more matches are adjacent, there are rewritten as a single unit, in a same line

-

As soon as two consecutive matches, are NON adjacent, there are displayed in two consecutive lines

Quite different than before, isn’t it ?!

I, then, realized that you can start, with my general regex S/R , exposed in my THIRD post, on that topic, which is :

SEARCH

(?s)^.*?(Your regex to match)|(?s).*\zREPLACE

(?1\1\r\n)With a minor modification, this new general S/R, below, will adopt the same output displaying, than the Scott’s script :-))

SEARCH

(?s)^.*?((?:Your regex to match)+)|(?s).*\z( GENERAL regex syntax )REPLACE

(?1\1\r\n)So, if we use your second regex

\.\d{2}, that we insert in the general regex syntax, above, we obtain the practical S/R:SEARCH

(?s)^.*?((?:\.\d{2})+)|(?s).*\zREPLACE

(?1\1\r\n)which gives, after replacement, the expected output text, identical to Scott’s script one !

NOTES :

-

Compared to my THIRD post, the second part, of this new general regex, has changed into :

((?:Your regex to match)+):-

It represents any consecutive and adjacent matches of your regex, which is stored as group 1 and output on a single line, followed by a line break

-

The inner parentheses ,

(?:Your regex to match), stands for a non-capturing group, containing a single match of your regex

-

-

The IMPORTANT and P.S. sections, of my THIRD post, are still pertinent

Best Regards,

guy038

P.S. :

I did an other tests, with your first regex

\d{3}\.and, also, with the simple regex\d\.\d, leading to the appropriate following S/R :SEARCH

(?s)^.*?((?:\d\.\d)+)|(?s).*\zREPLACE

(?1\1\r\n)After replacement, the resulting text is, as expected :

4.1 0.1 0.4 5.33.98.0 5.33.99.2 5.33.2 0.3 0.25.6 5.55.52.2 5.1 5.52.2 2.3 8.1 5.33.2 0.0 5.33.2 3.2 1.23.1 7.2 1.2 3.4 5.1 5.52.1 7.42.95.0 7.42.95.9 1.53.61.9 1.53.61.1 8.33.99.0 8.33.1 1.1and corresponds, exactly, to the text, generated by the Scott’s script :-))

-

-

So I’m currently trying to copy some multi-line (red)marked text out of a large (~70MB) file, and my Pythonscript technique for doing so (see earlier posting in this thread) works but is super-slow on a large file; it iterates through the file one position at a time (

pos += 1). Is there a faster way to code it, given the functions we have at our disposal for doing this? @Claudia-Frank , ideas? :-) -

See next post, this is wrong!

AFAIK usingindicatorStart()andindicatorEnd()is quite efficient finding marked locations. The code you posted above doesn’t seem to be utilizing this as efficiently as it could. I have no way of testing this following code but you should be able to do something like this:start = 0 end = 0 while True: start = editor.indicatorStart(SCE_UNIVERSAL_FOUND_STYLE, end) if start == 0: break end = editor.indicatorEnd(SCE_UNIVERSAL_FOUND_STYLE, start) accum_text += editor.getTextRange(start, end) + '\r\n'Again this hasn’t been tested so there may be corner cases you need to check for…such as usingstart + 1when callingindicatorEnd()but this is the gist of it. -

Woops sorry about the above, it was way off. Here is a small LuaScript which works (I’m sure you can easily translate it into Python)

SCE_UNIVERSAL_FOUND_STYLE = 31 start = editor:IndicatorEnd(SCE_UNIVERSAL_FOUND_STYLE, -1) while start ~= 0 and start ~= editor.Length do endd = editor:IndicatorEnd(SCE_UNIVERSAL_FOUND_STYLE, start) print(editor:textrange(start, endd)) start = editor:IndicatorEnd(SCE_UNIVERSAL_FOUND_STYLE, endd) endNote: The one major initial bug I know if is that it is incorrect if the very first character of the file is marked.

-

This is strange, isn’t it? You have to use IndicatorEnd to find the start position but it is like it is…

Cheers

Claudia -

Yeah I ran into this as well when modifying my DoxyIt plugin…the way I came to think of it now is that it finds the end of the range you specify by

pos. And technically a range that is not marked has an end…which is the start of the range you want…oh well :) -

yeah, :-) sounds … logical … some how . … still confusing :-)

And what makes it confusing even more, what you already said, is, that if you do

editor.indicatorEnd(SCE_UNIVERSAL_FOUND_STYLE, -1) you will get the end position.

Aahhhh :-DWhat I meant is about

Note: The one major initial bug I know if is that it is incorrect if the very first character of the file is marked.

Cheers

Claudia -

@dail , @Claudia-Frank :

Thanks for your inputs, I used the basic ideas but came up with my own Pythonscript version that is much faster than my original PS version on large files, and seems to correctly handle the oddities of the editor.indicatorEnd() function previously mentioned.

So here is RedmarkedTextToClipboard2.py:

def RTTC2__main(): SCE_UNIVERSAL_FOUND_STYLE = 31 # N++ red-"mark" feature highlighting style indicator number ind_end_ret_vals_list = [] ierv = 0 while True: ierv = editor.indicatorEnd(SCE_UNIVERSAL_FOUND_STYLE, ierv) # editor.indicatorEnd() returns 0 if no redmarked text exists # editor.indicatorEnd() returns last pos in file if no more redmarked text beyond the 'ierv' argument value if ierv == 0 or len(ind_end_ret_vals_list) > 0 and ierv == ind_end_ret_vals_list[-1]: break ind_end_ret_vals_list.append(ierv) if len(ind_end_ret_vals_list) > 0: if editor.indicatorValueAt(SCE_UNIVERSAL_FOUND_STYLE, 0) == 1: # compensate for weirdness with editor.indicatorEnd() when a match starts at the zero position zero = 0; ind_end_ret_vals_list.insert(0, zero) # insert at BEGINNING of list if editor.indicatorValueAt(SCE_UNIVERSAL_FOUND_STYLE, ind_end_ret_vals_list[-1]) == 0: # remove end-of-file position unless it is part of the match ind_end_ret_vals_list.pop() start_end_pos_tup_list = zip(*[iter(ind_end_ret_vals_list)]*2) # see https://stackoverflow.com/questions/14902686/turn-flat-list-into-two-tuples accum_text = '' for (start_pos, end_pos) in start_end_pos_tup_list: accum_text += editor.getTextRange(start_pos, end_pos) + '\r\n' if len(accum_text) > 0: editor.copyText(accum_text) # put results in clipboard RTTC2__main() -

Hi Scott,

a nice one - good performance improvement. :-)If you are still looking for performance increase,

a general suggestion would be to use as less global objects as possible within a loop as

the cost of loading global is expensive.

Cache global objects at the beginning of the script.

Creating the tuple list from the beginning should be faster than creating from a flat list.

Meaning do your two indicatorEnd calls and create a tuple from the results which than

gets added to a list.

Maybe a list comprehension to create the accum_text is faster as well - but not really sure

as it would need to call the global object.

All in all I assume this might make it faster up to 3-5%, not sure if it is worth thinking about it.Cheers

Claudia -

not sure if it is worth thinking about it.

I should have posted my before-and-after timing, but really, the “before” was “forever” on my 70MB data file! The “after” was extremely quick, certainly on par with how long it took Notepad++'s Mark feature to redmark my desired text. Therefore performance was rated “very acceptable” for the new version. And that’s really all the performance I care about, so further optimizations aren’t worth it to me. Probably some of those optimizations you suggest would make the code less readable, too, so I’m definitely not wanting to go there (although now I leave myself open to comments on how readable/unreadable the existing code is). :-D

I probably would have written this better the first time around if how these “indicator” functions worked was better documented!

Until Notepad++ natively allows a non-destructive (@guy038’s regex method is destructive…but there is UNDO…hmmm) copy of all regex-matched text, this little script will serve me nicely, now on all files big and small.

-

I have read almost all post but i did not know exactly, what was the problem … however i am continue read this forum and know the new things …[Dissertation Proposal Writing Service](LINK REMOVED)

-

I am in the same situation but regular expression method is not working for me to copy match text.

I want to grab all occurrences in configuration file where first line starts from ‘object’ and immediately second line starts with ‘nat’

object network obj_any

nat (inside,outside) dynamic interface

object network obj-test

nat (DMZ1,outside) static 10.206.49.180

object network obj-192.168.236.200

nat (DMZ1,outside) static 10.206.74.60

object network obj-192.168.236.8

nat (DMZ1,outside) static 10.206.49.183 tcp 8080 80

object network obj-192.168.236.9

nat (DMZ1,outside) static 10.206.49.178 tcp 1002 22

object network obj-192.168.236.10

nat (DMZ1,outside) static 10.206.49.178 tcp 8080 80

object network obj-192.168.236.13

nat (DMZ1,outside) static 10.206.74.58 dnsI wrote regular expression ^object.\R\snat.* to grab both lines

starting with ‘object’ and with ‘nat’ but when I am replacing it with

(?1\1), it is deleting the matched lines. Any dea what could be the correct replace string to keep only matced two lines -

Not sure exactly what you are asking but on your data this seems to work to match it:

Find-what zone:

(?-s)^object.*\Rnat.*But what’s this about replacement? This thread is just talking about matching text, redmarking it, and copying it…so I’m confused about what you want to do…

-

@Scott-Sumner sorry for the confusion. What I want, whatever my regular expression matches, it is two line match (first line starts with ‘object’ and second line starts with ‘nat’). So like my regular expression will catch 100 instances of two lines below in huge file with other data as well and I want to copy that multi-line match.

object network obj-192.168.236.13

nat (DMZ1,outside) static 10.206.74.58 dns‘mark’ is marking all lines but ‘bookmark’ is only bookmarking first line, not second line so I cannot copy through bookmark.

So question is how to copy all instances of multi-line match by regular expression?

-

Have you actually read this thread from top to bottom? If so, have you tried setting up and using

RedmarkedTextToClipboard2.pyabove? If I’m understanding your need correctly (still have my doubts) it seems as if that would solve the problem… -

@Scott-Sumner I will try this script. But without script, is it possible to copy multiple instances of matched result (that is multi-line) by regex in a text file?

-

Ummmm, well…No…that’s why the script was developed in the first place…seems like this should be obvious from the earlier postings in this thread…

-

Hey all,

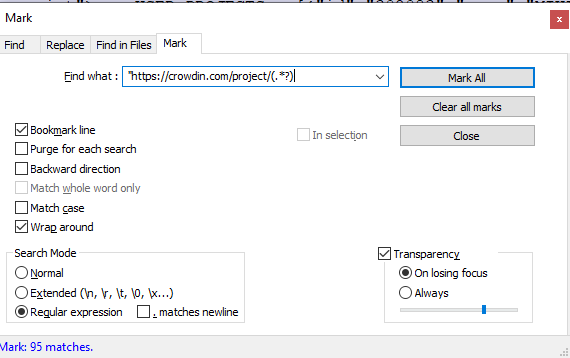

I know this topic is quite old, anyway decided to share solution I’ve discovered (I’m not so technical, so Python script isn’t the option for me). So, long story short, I’ve extracted a long JSON response and needed to copy 95 URLs from it only and ignore everything else. Like in @Suncatcher’s case, everything was stored in a single line.

So, I did the following:

-

Search for

https://site.com/project/(.*?)regexp and replace all matches with\r\nhttps://site.com/project/$1\r\nso URLs were moved to separate lines; -

Afterward, switch to “Mark” tool, check “Bookmark line” option and mark all

https://site.com/project/(.*?)

-

Finally, click “Search” menu => Bookmark => Remove unmarked lines

That’s it, list of necessary items only (URLs in my case) was created 🎉 My case is easier comparing to topic’s author, anyway hope this will be helpful for someone in the future, cheers!

P.S. @guy038 thanks for mentioning Bookmark feature, I’ve never used it before and it’s super helpful

-