Fix corrupted txt file (NULL)

-

@pnedev said in Fix corrupted txt file (NULL):

and this is exactly what I had written before

So I could have spent my time with something more productive,

like watching TV and eating chips ;-)

At least I learned something new :-)

I thank you for your contributions to npp; hopefully @donho will reconsider his opinion.

-

So I’m back to this thread, hopefully without a pure garbage contribution this time…

@Ekopalypse said in Fix corrupted txt file (NULL):

So I could have spent my time with something more productive,

like watching TV and eating chips

I think your analysis and “market comparison” were valuable and an interesting read. Put the chips down and turn off the TV.

So I did a little research and read about how the proposed fix to the issue was rejected because it had too much risk of introducing a regression.

I find that a tad bit ironic because isn’t a text editor that can lose data by corrupting hours of someone’s work already in a “regressed” state?

So what’s the future on this?

Don’s rejected a fix once. AFAICT Don doesn’t keep his “finger on the pulse” of current user concerns (i.e., doesn’t monitor here, doesn’t communicate with people via email about Notepad++). From the limited back-and-forth I’ve seen with him in issue comments on GitHub, there seems to be a language-barrier problem as well when the communication is in English.

I just don’t know…

-

You are more than welcome.

I thank you too for your great analysis. I find your comparison very interesting.

Next time just keep the TV ON and the chips within arm’s reach so you can combine your favourite activities ;) And make sure to save often ;))

BR

-

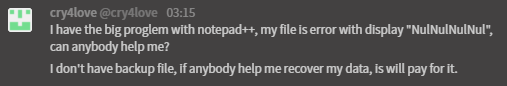

Another user with this problem has been reported today on the “Live Support” channel:

“the big problem with N++”…indeed.

-

I have the same problem. Recuva and other programs did not help. I think data recovery companies might be able to help. What do you think? That file is very important to me.

-

Hi @ben and others

Were you able to resolve this issue? Is there any software that can be used? I have the same issue and need to recover a file. Using Recuva, I had a similar experience to the others.

Please help me if anybody has resolved this. Thanks!

-

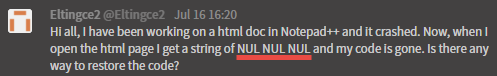

I suppose I will just keep echoing these here as I happen to notice them, using this thread as a “rallying point”:

-

@Alan-Kilborn

I don’t think there is a solution for this. I have been looking for one for the past week, and nobody has a working solution. I see NULNUL in Notepad++; if I open the file in Notepad, I see a blank file. I am not sure of the original size of the file, but it shows as 13 KB, which might be smaller than or the same as the original. If I open it in Sublime Text, it shows all 0000 0000 entries for about 1000 lines, so I guess it may be the original size. There is no recover-from-backup option, and Recuva does not help. I’m not sure whether it was due to a system crash or to malware corrupting the file. This is the only file that got corrupted, as it was the last one saved before the crash; none of the other files open in Notepad++ in the same session got corrupted. I can see all the other files in the backup folder, but not this one, because I had saved it to a different location than the Notepad++ backup location. If there is proven software that I can pay for and that will fix this, I will buy it. I do not see anything available for TXT files.

-

@general-purpose said in Fix corrupted txt file (NULL):

I don’t think there is a solution for this.

From my somewhat limited knowledge, I agree.

Well, except the solution is to prevent it in the future.

But the change to the s/w for that has been declined, so…

If there is a working proven software I can pay for and will fix this, I will buy that.

Well, this would presume that the data still exists.

If it doesn’t (which I would suspect to be true), sadly nothing can recover it.

-

I am not sure about that but I suspect the file contents might still exist somewhere on the disk.

I had been thinking about the NUL issue before when I made the fix.

Why is the file showing only NULs?

You see, when you save the file, no matter if it is immediately written or partially written or not written to the disk at all, when opened after the crash the file should show some sane data + some corrupted portions. This is not the case.

One reason would be that the file is wiped (written as all NULs) before the actual write to the disk. This is unlikely, however, because it contradicts the very reason why the actual content is not immediately written to the disk: why first write NULs and then write the new contents again?

The second reason is the file location.

What we know about the file is its name. But from the system’s point of view, the name is just an address of the file content. The system keeps a register in its file system: the correlation between the file name and where on the disk its content resides. Now when we save, the name is kept the same and the new content is supposedly written to the disk, BUT THE PLACE WHERE IT IS WRITTEN MIGHT DIFFER FROM THE PREVIOUS ONE. In other words, the address of the file changes. This means that the register containing the name-to-content correlation needs to be updated as well (in the file system itself).

Now imagine that you save the file, the new address is assigned and updated in the file system (so your file name points to the new location on the disk), BUT your new file content has still not been flushed to the disk. The system crashes, you restart, open your file, and what you see is the content at the new location (which may be random data or all NULs, for example).
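Assuming the failure mode is the one described above (the name already points at the new location while the data is still unwritten), the usual general-purpose way to close that window is: write the new content to a temporary file, force it to the disk, and only then rename it over the original. The minimal Python sketch below illustrates that pattern; it is not the Notepad++ code or @pnedev’s fix, and `durable_write` is a hypothetical helper name. Per the Python docs, `os.fsync` calls the C runtime’s `_commit()` on Windows; the native Win32 counterpart is `FlushFileBuffers`.

```python
import os
import tempfile


def durable_write(path: str, data: bytes) -> None:
    """Hypothetical helper: replace `path` with `data` so that a crash
    leaves either the old content or the new one, not unwritten blocks."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file in the same directory, so the final
    # rename stays on one file system.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()             # push Python's buffer to the OS
            os.fsync(tmp.fileno())  # ask the OS to push its cache to the disk
        # Only now point the name at the new, already flushed content.
        os.replace(tmp_path, path)
    except BaseException:
        # Clean up the temporary file if anything failed before the rename.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise
    if os.name == "posix":
        # On POSIX, also flush the directory entry that records the rename.
        dir_fd = os.open(directory, os.O_RDONLY)
        try:
            os.fsync(dir_fd)
        finally:
            os.close(dir_fd)
```

Usage: `durable_write("notes.txt", text.encode("utf-8"))`. The extra flushes cost some time on every save; whether that trade-off is acceptable is a design decision for the editor.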

In that scenario, your file’s old content is perhaps still somewhere on the disk, but the link between the file name and that location is gone.

Software like Recuva might help by trying to find where that previous location was before the save and the crash, but unfortunately it will not always succeed.

As far as I remember there are some options you need to set in Recuva to maximize your chances for success but I can’t remember them for sure.

If you haven’t done that already, go through Recuva settings and turn on all of them that imply something like “deep” or “thorough” or “aggressive” scan. It will give you more results you need to check yourself but it might help.
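If you can make a raw image of the drive (with whatever imaging tool you trust, ideally saving the image to a different disk so nothing overwrites the lost blocks), you can also look for the old content yourself by searching the image for a phrase you remember from the file. A minimal Python sketch, assuming the image is a plain file and the text was saved in an encoding you know (e.g. UTF-8); `find_in_image` is a hypothetical helper, not part of Recuva or any recovery tool:

```python
import os


def find_in_image(image_path: str, needle: bytes,
                  chunk_size: int = 1 << 20) -> list[int]:
    """Hypothetical helper: return every byte offset of `needle` in the file."""
    if not needle:
        raise ValueError("needle must not be empty")
    hits = []
    overlap = len(needle) - 1  # bytes to carry over between chunks
    tail = b""                 # last bytes of the previous chunk
    consumed = 0               # total bytes read from the file so far
    with open(image_path, "rb") as image:
        while True:
            chunk = image.read(chunk_size)
            if not chunk:
                break
            window = tail + chunk
            base = consumed - len(tail)  # file offset of window[0]
            start = 0
            while True:
                i = window.find(needle, start)
                if i == -1:
                    break
                hits.append(base + i)
                start = i + 1
            consumed += len(chunk)
            # Keep enough bytes to catch a match split across two chunks;
            # a full match never fits inside the tail, so none is counted twice.
            tail = window[-overlap:] if overlap else b""
    return hits


if __name__ == "__main__":
    print(os.path.basename(__file__), "defines find_in_image()")
```

For example, `find_in_image("disk.img", "a phrase you remember".encode("utf-8"))` returns the offsets of every match, and the bytes around each offset can then be read out and inspected. A hit only means that a copy of that fragment survived; if the file was stored in several pieces, the rest may be elsewhere or gone.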

If it doesn’t help, then perhaps there is nothing else you can do.

Good luck!

-

@pnedev

I agree with your analysis and reasoning. Thanks for the detailed response. I used Recuva with deep scan. It did not help. As you said nothing much to do at this point.

Lessons learnt.

-

And finally, as a safety measure, I started using Google Drive Backup and Sync. I am a PHP developer, so all the files I edit are in the htdocs folder. Backup and Sync monitors the folder and starts uploading a file to Drive as soon as its contents or its modified date change (I don’t know which one it is). This has been serving me well.

-

How many people does it take?

It has happened to me not once, not twice, but now three times in the past 4 months, and the last time was the most critical.

- First time was when saving previously unsaved NPP files I had open, for a computer repair… I saved the file with a name, but when I restored the files later, the file I had saved it as contained only nulls. I figured it might be related to the backup writing or such, so I didn’t think much of it, but I lost some important life lessons I’d typed up.

- Second time was an entirely unsaved NPP file I’d put some complex FFMPEG commands into, which I had worked out while putting together a more flexible processing script. I lost hours of fiddling that got the right settings, but figured that because I hadn’t been saving at all, it was my own fault, and got in the habit of saving constantly.

- And now the third time… lost 2 weeks of heavy work put into a main script for a new business I had been passionately working on. This time it was a saved file. I had saved it maybe 15 minutes earlier, and hadn’t edited it since, just hadn’t closed it.

Lost probably 50 hours of work. Tried Recuva/deep scan/dump search/etc.

Killing me. It was a saved file.

I am guilty of cold killing the computer with the power button when sometimes the system becomes unresponsive (perhaps due to heavy Opera/ffmpeg/etc usage… but more likely the frustrating Windows/antivirus background processes).

And I am guilty of not backing up constantly. I did back up the folder a couple of weeks ago… just before I started writing this specific script. I was putting in brutal hours to get the videos processed and other key aspects of the new venture done in time, and hadn’t had the time to set up a more robust backup solution yet. That said… if this keeps killing people who know to do backups, how many amateurs/students/etc. will it decimate before it’s considered important enough by @donho and others to make it a priority?

It definitely looks like write caching is doing us all in. I’ll turn it off, but that is a reactive measure other users won’t know to take (and a chore to remember on every install and every machine, in addition to the costs of doing so).

If using the Win32 API would endanger things by introducing a regression, I still don’t understand why some intermediate option isn’t viable??? For example: comparing the backup file to the buffer before overwriting the main file (to at least prevent loss of the previously saved data), or verifying, before overwriting the current file on restart, that the backup version has changed or that it is not corrupted/null-filled. Maybe such a fix would slow execution down a bit, but the value of being able to rely on your editor not to overwrite what may be vital files seems beyond compare.

Or else, if we’re not going to have reliable backups, just scrap the whole backup setup. Because when you need it most, it fails… and it even overwrites previous files… it’s awful. Any file you edit in N++ may not only lose unsaved work, but also overwrite the existing file? I’m begging and pleading for this to become a priority. How many more disaster stories are needed!?!
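The safeguard suggested above (refuse to restore a backup that is empty or null-filled) is cheap to express. A minimal Python sketch of the idea; both function names are hypothetical, and this is not how Notepad++’s session backup is actually implemented:

```python
import shutil


def is_empty_or_all_nul(path: str, chunk_size: int = 1 << 16) -> bool:
    """Return True if the file is empty or contains nothing but NUL bytes."""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                return True   # reached end of file without a non-NUL byte
            if chunk.strip(b"\x00"):
                return False  # found real data


def restore_if_sane(backup_path: str, target_path: str) -> bool:
    """Copy the backup over the target only if the backup looks intact."""
    if is_empty_or_all_nul(backup_path):
        return False  # leave the existing file untouched
    shutil.copyfile(backup_path, target_path)
    return True
```

One design consequence: a file that legitimately contains only NUL bytes would also be refused, which seems an acceptable price for an editor restoring text. A real implementation would also want the copy itself to be flushed to the disk before trusting it.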

Looks like there has been 1 minor release and at least 6 patch releases since the work on solving this. I understand the developers are doing this out of their own kindness and selflessness… but what other updates are more vital???

@pnedev, thank you so much for your persistence on the issue. I see you reopened the commit and have pleaded with Don to make it happen. I really hope it comes through. Too late for me (this time), but hopefully your work will save many, many thousands of hours of people’s lives.

-

@Shane-Young said in Fix corrupted txt file (NULL):

How many people does it take?

Even an infinite number of people reporting the problem here in the forum will likely do nothing.

The only official bug and issue tracking happens on GitHub. Comment on the official issue tracker – for example, in the still-open issue #6133 – begging that something be done.

But complaining about it here will do nothing. Every regular in this forum who has expressed an opinion agrees that something needs to be done to fix it, but all the agreeing opinions in the world expressed in this forum will do nothing to fix the problem. Our best bet right now is @pnedev’s fix, but the only hope for that is to convince Don that the fix won’t break other things.

-

Just merge the fix of @pnedev into master:

https://github.com/notepad-plus-plus/notepad-plus-plus/commit/a1031517742c7ef600b825bf9f193b3f2740dff6

The fix will come with v7.9.1.

Thank you @pnedev for your work!

-

Hi guys

Thanks to the investigation & implementation of @xomx, we have identified a plugin that could possibly cause this issue: “SaveAsAdmin”.

ref: https://github.com/notepad-plus-plus/notepad-plus-plus/issues/14990#issuecomment-2053828749

While browsing this very long discussion, I found no Debug Info related to the issue of files corrupted with NUL characters.

So for people who have encountered NUL characters content problem, could you confirm (or deny) that you have had SaveAsAdmin plugin installed?

-

@wisemike2 This might be a Windows OS issue, not a Notepad++ one.

I had PNG files overwritten with NULL bytes at the same time as TXT files.

(no virus, checked).

The TXT files were the ones currently open in Notepad++.

The PNG files were opened by the OS (a background image for Windows, and an image created with and still open in the Snipping Tool).

Since Notepad++ does not touch PNG files, it might be a coincidence: under some circumstances, some Windows process may ruin files that are currently in use, or that are being saved during a power on/off.

As always with Windows, it is hard to debug.

Just sharing my observations.

-

It will be easier for others on the forum to help you if you share debug info (?->Debug Info from the Notepad++ main menu).

I seem to recall that the specific problem of Notepad++ overwriting files with a lot of NUL characters was fixed a long time ago, but I’m not super familiar with this topic.