Find a URL that is unique

-

Playing around with the Internet Archive’s awesome saving mechanism (saving pages by emailing them to savepagenow@archive.org), I’ve come across some issues with saving tweets on Twitter. There is a random chance that saving [https://twitter.com/<Username>/status/<ID number>] gets redirected and ends up saving [https://api.twitter.com/2/timeline/conversation/<TweetID>.json?<long string of commands>] instead. You can see this in the list that is emailed back to you: it contains unfamiliar URLs you didn’t submit.

Sadly, notepad++'s compare tool didn’t have a feature that filters out only differences, so if a ton of URLs were redirected, I would have to tediously scroll down the list by hand and copy the URLs that were “missing” (marked red because they were replaced with the redirected link).

So I thought: I have the list of URLs I intend to save in Notepad++. I take the email sent back to me, copy the list from it, paste it into the same file as my submitted links, and do this:

Find what: [https\:\/\/web\.archive\.org\/web\/[0-9]*\/]

Replace with: [] (nothing)

Then a find that searches for URLs that exist only once would let me find the submitted URLs that were redirected (because I have both copies, the submitted list and the list sent back to me, non-redirected URLs will appear twice). But how do I do that?
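For readers who would rather script it, the prefix-stripping step described above can be sketched in Python. This is only an illustration: the function name `strip_wayback_prefix` and the sample line (its timestamp and tweet path) are made up, and the pattern is the one from the Find what box with the unneeded escapes dropped.

```python
import re

# Same pattern as the Find what box, minus the unnecessary backslashes.
WAYBACK_PREFIX = re.compile(r"https://web\.archive\.org/web/[0-9]*/")

def strip_wayback_prefix(line):
    """Remove the first archive prefix from a line, leaving the saved URL."""
    return WAYBACK_PREFIX.sub("", line, count=1)

# Hypothetical line as it might appear in the emailed list.
returned = "https://web.archive.org/web/20200101000000/https://twitter.com/example/status/1"
print(strip_wayback_prefix(returned))
```

Lines that do not start with the archive prefix pass through unchanged, so the submitted list and the returned list can be processed together.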

-

My advice would be to sort the list (presumes they are one per line but you do not say) and then use the command to remove consecutive duplicate lines. What is left should be unique.

Sadly, notepad++'s compare tool didn’t have a feature that filters out only differences,

I don’t know of ANY compare tool that detects duplicate lines.

-

I’ve already tried that (I usually prefer one URL per line), but it does not filter out links that exist only once. All it does is tell Notepad++ to REMOVE all duplicate lines, not SEARCH for links that exist once. The compare plugin merely highlights text where it detects a change. What I am trying to do is use the “find” function to look for URLs that exist only once. Here is an example:

https://google.com
https://facebook.com
https://twitter.com
https://community.notepad-plus-plus.org/
https://google.com
https://facebook.com

When I press CTRL+F and use Find All, it should show this in the result:

https://twitter.com
https://community.notepad-plus-plus.org/

Because those two URLs exist only once, while https://google.com and https://facebook.com are duplicated.
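For anyone who wants to check the expected result outside Notepad++, here is a minimal Python sketch of the same idea using the example list. The function name `urls_seen_once` is made up for illustration, and it assumes one URL per line.

```python
from collections import Counter

def urls_seen_once(text):
    """Return the lines that occur exactly once, in their original order."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    counts = Counter(lines)
    return [line for line in lines if counts[line] == 1]

example = """\
https://google.com
https://facebook.com
https://twitter.com
https://community.notepad-plus-plus.org/
https://google.com
https://facebook.com
"""

# Print only the URLs that have no duplicate in the list.
for url in urls_seen_once(example):
    print(url)
```

Counting first and filtering second keeps the original order, so no sorting is needed in this version.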

-

Ah, maybe it’s me, but it was unclear that you wanted that.

In that case, sort the lines as before, make sure the last line of your file has a line-ending at its end, and then:

Open the Replace dialog by pressing Ctrl+h and then set up the following search parameters:

Find what box:

(?-s)^(.+\R)\1+

Replace with box: Make sure this box is EMPTY !

Search mode radiobutton: Regular expression

Wrap around checkbox: ticked

. matches newline checkbox: doesn’t matter (because the (?-s) leading off the Find what box contains an s variant)

Then press the Replace All button.
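To see what this replacement does without touching a real file, the same steps can be approximated in Python. This is a sketch under assumptions: Python's `re` module has no `\R`, so it uses plain `\n` line endings instead, and the helper name `keep_unique_lines` is made up for illustration.

```python
import re

def keep_unique_lines(text):
    """Sort the lines, then delete every run of two or more identical lines."""
    lines = sorted(text.splitlines())
    # Give every line, including the last one, a line ending,
    # mirroring the "line-ending at its end" requirement.
    normalized = "".join(line + "\n" for line in lines)
    # ^(.+\n)\1+ matches a whole line followed by one or more exact copies.
    return re.sub(r"^(.+\n)\1+", "", normalized, flags=re.MULTILINE)

sample = "b\na\nc\na\nb\nb"
print(keep_unique_lines(sample))
```

Because the captured group includes the line ending, a final line without one would not match its earlier copies, which is why the sketch adds a line ending to every line before substituting.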

-

Testing right now. Just sent emails containing links. I’ll let you know when I get a reply back. The IA is under maintenance, so delays may affect when I get the message back (normally 5-15 minutes after sending the email I get a reply)

-


@Alan-Kilborn

It worked! Thanks! Also, in case if anyone reading this don’t know what this means:

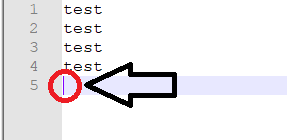

“make sure the last line of your file has a line-ending at its end”,

that means you make a line break after the last character so that the last line is blank:

I’ve also found that Twitter sometimes redirects to this:

[https://twitter.com/i/js_inst?c_name=ui_metrics]