Rookie question: how do I display a Unicode-form file with Unicode-control line-breaks?

-

I’m a novice here, and haven’t found the answer through my own research.

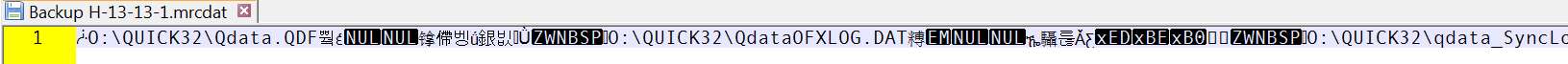

I have a file that appears to be in 2-byte Unicode form. I don’t know the actual internal details, but it definitely is 2-byte form.

It appears that the end-of-line break character used is ZWBNSP, i.e. Unicode Character (U+FEFF), Zero Width No-Break Space (BOM, ZWNBSP)

I would like to use Notepad++ to at least display (if not also edit) this file, with the QWNBSP characters terminating each “line” triggering NPP to display each line one at a time, same as an ordinary text file or similar.

What options or preferences or “style” tweaks must I apply, in order to be able to see the “lines” of this Unicode file exactly one screen line per file line, with QWNBSP delimiting each line?

Is there a HELP or FAQ I can reference?

-

If you open a file in Notepad++ and it shows

NULcharacters, use a different text editor to work with that document. Notepad++ has no problems with UTF-16 files in general, but any UTF-16 file that contains a literalNULcharacter (as opposed to a zeroed-out second byte of an ASCII character) is guaranteed to cause certain problems with Notepad++, most notably causing many plugins to truncate the document at the firstNULcharacter.

Why? In many parts of the Notepad++ codebase (for example, theSCI_GETTEXTScintilla method that a plugin can use to read the currently open file), Notepad++ treats files asNUL-terminated C-strings. I have tried unsuccessfully to add a feature to Notepad++ that would warn users when they work with such files, but Don Ho would rather let users get blindsided by these issues. -

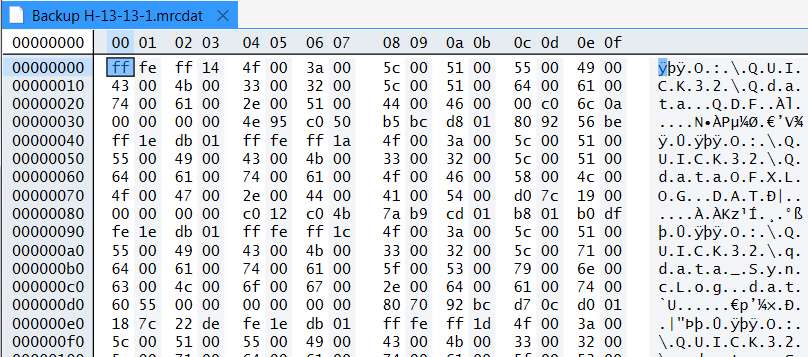

@DSperber1 A file that starts out with FF FE like yours is most likely interpreted as UTF-16 with a Little-Endian Byte-Order-Mark. If you load the file using Notepad++ then you should see

UTF-16 LE BOMat the right side of the status line at the bottom of Notepad++.If a UTF-16 LE BOM encoded file started out with upper case ABC and then a CR/LR newline followed by DEF then the file would contain

ff fe 41 00 42 00 43 00 0d 00 0a 00 44 00 45 00 46 00 0d 00 0a 00The first line with

ff feis the UTF-16 LE BOM thing.

The second line are the letters A, B, and C followed by a carriage return (0d 00) and then a line feed (0a 00).

The third line is similar but has the letters D, E, and F.That’s what a UTF-16 LE BOM encoded file is supposed to look like.

The first character in your file is

14ffwhich seems strange. After that though is normal UTF-16 LE BOM encoded Unicode “O:\QUICK32\Qdata.QDF”. Immediately after the final F is C000 0A6C and then 0000 0000 which is not expected for a UTF-16 LE encoded text file.Your file is more likely what’s known as a binary format file and should NOT be treated or edited using an editor that deals with normal UTF-16 LE files.

I see that the file extension is

.mrcdatGoogle says that’s a Macrium Reflect log file. There’s apparently a utility known as mrcdat2txt that can convert your.mrcdatfile into a text file you can edit with Notepad++ or a CSV file you an load into a spreadsheet. -

@DSperber1 said in Rookie question: how do I display a Unicode-form file with Unicode-control line-breaks?:

What options or preferences or “style” tweaks must I apply, in order to be able to see the “lines” of this Unicode file exactly one screen line per file line, with QWNBSP delimiting each line?

I had to run out the door to go look at the comet (which was fantastic) as I was writing/posting my previous reply. I deal with situations such as making a

ZWNBSPa line break by using search/replace in Notepad++'s Regular Expression mode.

Search:\x{FEFF}

Replace:\r\n

The\x{FEFF}will matchZWNBSPs in the file other than the very first one which is the file’s Byte-Order-Mark. The replacement,\r\n, is a carriage return and line feed. It’s ok if you don’t know the hex code for something like aZWNBSPon the screen. Just select the character and then doCtrl+Hto open the search/replace dialog box. The Search field will get auto-filled with the selected character.If you want to get rid of all the funny looking characters then do

Search:[^\x20-~\r\n]+

Replace:\r\n

That will replace nearly anything that is not a plain ASCII space (\x20) to tilde (~ or \x7E), CR, or LF with a CRLF end-of-line mark. For my own projects I nearly always also allow tabs to remain and so use[^\x20-~\t\r\n]+to look for characters that are not plain ASCII.You should do this on a backup of your file as you will be destroying the file’s usability as a Macrium Reflect log file.

Notepad++ sort of works with files that contain NULs like your file. Basic editing works. However, many operations such as copy/paste will get truncated at the NUL. The copy/paste truncation is a Windows issue, not Notepad++. Other operations such as case conversion and sorting will also get truncated at the NULs as Notepad++ uses standard string libraries to do the work and those libraries work with NUL terminated strings. Notepad++'s search can’t be used to find NUL characters nor can you replace something with a NUL. However, you can use

[^\x20-~\t\r\n]+to get rid of NULs along with other non-plain-ASCII.The

[^\x20-~\t\r\n]+trick will not work on extended Unicode characters in the range U+10000 to U+10FFFF. There’s no easy workaround for those characters. Extended Unicode used to be uncommon but modern emoticons are in that area. -

Infinite thanks!!!

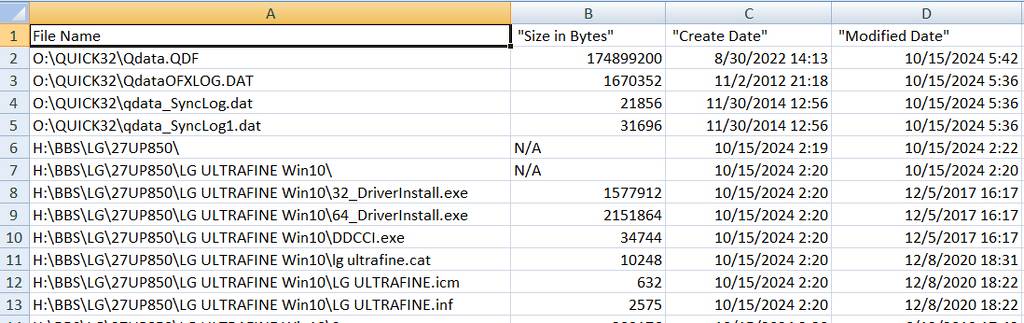

Yes, this MRCDAT file IS a Macrium Reflect backup detail log file that contains the fully-qualified names (and the key related directory data of file size, creation date and modified date) of the files written to its folder/file MRBAK file.

And what I really wanted was EXACTLY what is produced by using the MRCDAT2TXT.exe utility program (which I knew nothing about until you mentioned it). The input to the utility is exactly the MRCDAT file, and the output is a CSV-format text file.

The existence of MRCDAT2TXT.exe available for download on the Macrium site saves me the trouble of writing this exact program myself! It really is exactly what I wanted. I wanted to be able to know the fully-qualified path and file names of every file written to the MRBAK file.

I really have no intention of editing or manipulating the MRCDAT file itself, which really isn’t a line-oriented text file. It’s truly a Macrium Reflect data file, that just happens to contain exactly what I’m looking for. I just needed to be able to read it and then transform it into a “script”, invoking the ATTRIB command, to reset the file properties “A” flag in the directory entry for each and every file which got backed up.

PERFECT!

A million thanks.

-

Hello, @DSperber1, @mark-olson, @mkupper and All,

@@DSperber1, I’m pleased that you’ve got the right program (

mrcdat2txt.exe) to edit your.mrcdatlog files :-))

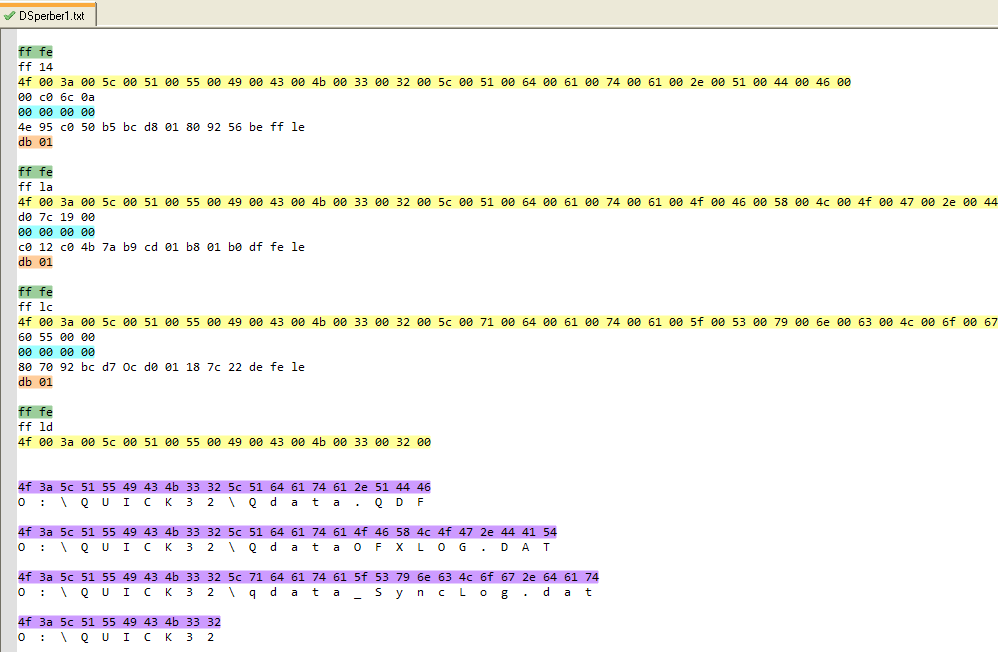

Now, before your last reply, I tried to guess the similarities between some parts of your

.mrcdatfile and, indeed, I’ve found some !As you can see on the picture below :

-

Each row of your table seems to begin with the

FFFEsequence -

Each row of your table seems to end with the

db01sequence -

A four zero-byte sequence occurs in middle of each section

-

The Yellow sequence looks like a valid

UTF 16 LE BOMsequence of characters. Thus, to my mind, I thought to get valid strings after suppression of allzerobytes, in these lines …

… And bingo ! You can verify that each Mauve/Pale Violet line, below, correspond to the

File Namepart of each line of your table !

Best Regards,

guy038

-