Standard ANSI and code still change to something else

-

Hi

i use v8.8.7 on windows 11 pro v 25H2.

when i right click to create new txt, press enter and hereafter double click the txt file opening it i NPP, its ANSI.

When i enter some text and save its saved in ANSI as supposed.

But when i enter danish letters æøå and save its suddently saved in something else, eg windows 1255.

It shows encoding characterset Hebrew which is wrong, it should not show anything

Why is that ?

How to always create and save in ANSI ?

thanks

Nolan

-

“ANSI” is the American National Standards Institute. One of the things they did during 80s-era computing was define different encodings that put various character sets into the 256-character page limit of an 8-bit character. At some point, computer newbies confused the organization that defined them with the encodings themselves, and the world was stuck with incorrectly calling all those 256-character encodings “ANSI”, even when referring to the 8-bit encodings that were specific to Microsoft’s DOS and later Windows operating systems.

When doing encodings for the “Windows” GUI OS, MS thoughtfully named their encoding standards as “WIN-####” or “Windows ####” (people write them both ways). For example, Windows 1252 is the Microsoft encoding that’s nearly identical to ISO-8859-1 (ISO = “International Standards Organization”, an international body who makes standards, like ANSI is US-specific). Windows 1255, which you mentioned, is an encoding for Hebrew.

When Notepad++ says “ANSI”, what it means is, “using whichever 8-bit encoding your installation of Microsoft has set as the default character set / codepage” (which gets even more confusing now that you can confuse things by telling the OS to use 65001, which is the UTF-8 codepage, which causes many unexpected bugs, since Notepad++ is not expecting multi-byte characters when in ANSI mode).

But anyway, when you save a file, Notepad++ writes those bytes to disk based on the default Windows encoding; but the OS does not save any metadata about the encoding of the file (back in the DOS days, FAT and FAT32 didn’t have enough space to store such metadata; and when MS made the NTFS for NT, they could have added in metadata like encoding, but chose not to) – but that means, when any application, Notepad++ or otherwise, reads the file later, they have no way to know for sure what encoding was used for a given “text file” based on the information in the file itself or based on non-existent metadata. As a result, Notepad++ uses a set of heuristics to guess, based on byte frequency and byte sequences, what encoding it probably is. But it often guesses wrong, which is why my recommendation is to always turn off Settings > Preferences > MISC > Autodetect character encoding: assuming that the majority of “ANSI” text files you are reading are made by you on your same computer with the same default codepage/encoding, you shouldn’t need Notepad++ to “guess” what encoding it thinks it is: you can just let it always apply the Windows default encoding when it reads the file.

Or you could do something that brings you into this century, by using the UTF-8-with-BOM or one of the UTF-16 encodings, any of which will unambiguously be able to encode any of the 160k or so characters defined by Unicode – which allows you to mix characters from across the world without any ambiguity of 1980’s style 8-bit encodings. If you have a choice in your data, choose UTF-8 or UTF-16; if you have no choice, complain to whoever is not giving you the choice that they are hindering efficiency by forcing you to continue to use outdated 1980’s character sets instead of a modern encoding built to interface with the whole world.

-

@PeterJones

Thanks i disabled auto detect just in case, but what i observed now was after a reboot it seemed to work as expected again.What ever the reason for this was, i don tknow.

The reason i use ANSI is the following:

i use Danish Windows 11 Pro 25H2 ie the latest version, and if i create a txt file with the build in notepad.exe application which uses UTF-8 and write the danish characters æ,ø,å then windows search nor copernic desktop search can find any file with letters æ,ø,å because its interpreted as letters not being æ,ø,å. This i assume is dictated by the OS, which is from 2025. If i save txt files in ANSI windows search and copernic perfectly finds txt files with the letters æ,ø,å, but not if saved in UTF-8.

This is both annoying and very weird.

Saving in ANSI seems not a proper solution more of a work around.

It does not make sense to have to use ancient ANSI to make this work and it seems contradictionary that the OS (via native notepad.exe saving in UTF-8 format) does not read correctly UTF-8, when notepad.exe saves in UTF-8.

Any explanation or proper solution to this ?

best Nolan

-

@NolanNolan said in Standard ANSI and code still change to something else:

@PeterJones

Thanks i disabled auto detect just in case, but what i observed now was after a reboot it seemed to work as expected again.Glad that helped.

… windows search nor copernic desktop search can find any file with letters æ,ø,å because its interpreted as letters not being æ,ø,å. This i assume is dictated by the OS, which is from 2025.

I guesss I’d never tried using Windows search to look for UTF-8 characters. That’s really annoying if they don’t handle that right. You’d think Microsoft would’ve figured that out long ago.

This is both annoying and very weird.

Understandable.

Any explanation or proper solution to this ?

Sorry, I have no insight into the OS level searches.

Nor do I have a proper solution. But, as an alternate workaround, instead of using Windows Search, use Notepad++'s Find in Files to search your files for UTF-8 characters? ;-)

BTW: You didn’t need to make that post twice. As the form tells you: until you have enough reputation/upvotes, you need to wait for a moderator to approve your post, so it won’t be visible immediately, so that’s why you couldn’t see your post. However, it looks like you now have enough upvotes so that your posts will go thru without moderator approval, so you shouldn’t have to wait for the post queue any more.

-

@NolanNolan said in Standard ANSI and code still change to something else:

use Danish Windows 11 Pro 25H2 ie the latest version, and if i create a txt file with the build in notepad.exe application which uses UTF-8 and write the danish characters æ,ø,å then windows search nor copernic desktop search can find any file with letters æ,ø,å because its interpreted as letters not being æ,ø,å. This i assume is dictated by the OS, which is from 2025. If i save txt files in ANSI windows search and copernic perfectly finds txt files with the letters æ,ø,å, but not if saved in UTF-8.

Try saving (whether in Notepad or Notepad++) as UTF-8 with BOM. In the absence of a byte order mark, Windows assumes files use the legacy code page associated with the system locale.

-

@PeterJones

thanks Peter, your help was again very helpful and insightfull, much appreciated :-)best Nolan

-

Yes you are indeed right, i just picked ANSI as the first option which was a work around solution for windowsx search to find æøå as content in txt files, but tested UTF-8 BOM and this format also works but UTF_8 without BOM does not work. Thanks for your suggestion, that will be a more modern and my default code from hereon.

But really weird that using Microsofts own notepad.exe that comes with a standard windows installation makes windows search not detect characters in txt files that belongs to the installation language of the OS.

I have also tried to find a solution to set the txt coding, system wide in the OS, but couldnt find any. SO i guess the way to go is to default UTF-8 BOM through the NOtepad++ app (by the way this even cant be set in the native microsoft notepad.exe app)

Thanks :-)

best Nolan

-

i now changed the coding to UTF-8 BOM, assuming this sets the default for all new txt files, but new files are still created as ANSI, when i right click empty space in file explorer and create new. But when i open NOtepad++ as an app it is opening with default UTF-8 BOM as expected, have i missed something regarding a setting ?

best Nolan

-

Notepad++ auto-detects encoding based on the characters you type. When you enter Danish letters like æ, ø, å, these aren’t part of standard ANSI, so Notepad++ switches to a code page that can support them (like Windows-1252 or sometimes misdetects as 1255).

To always save in ANSI:

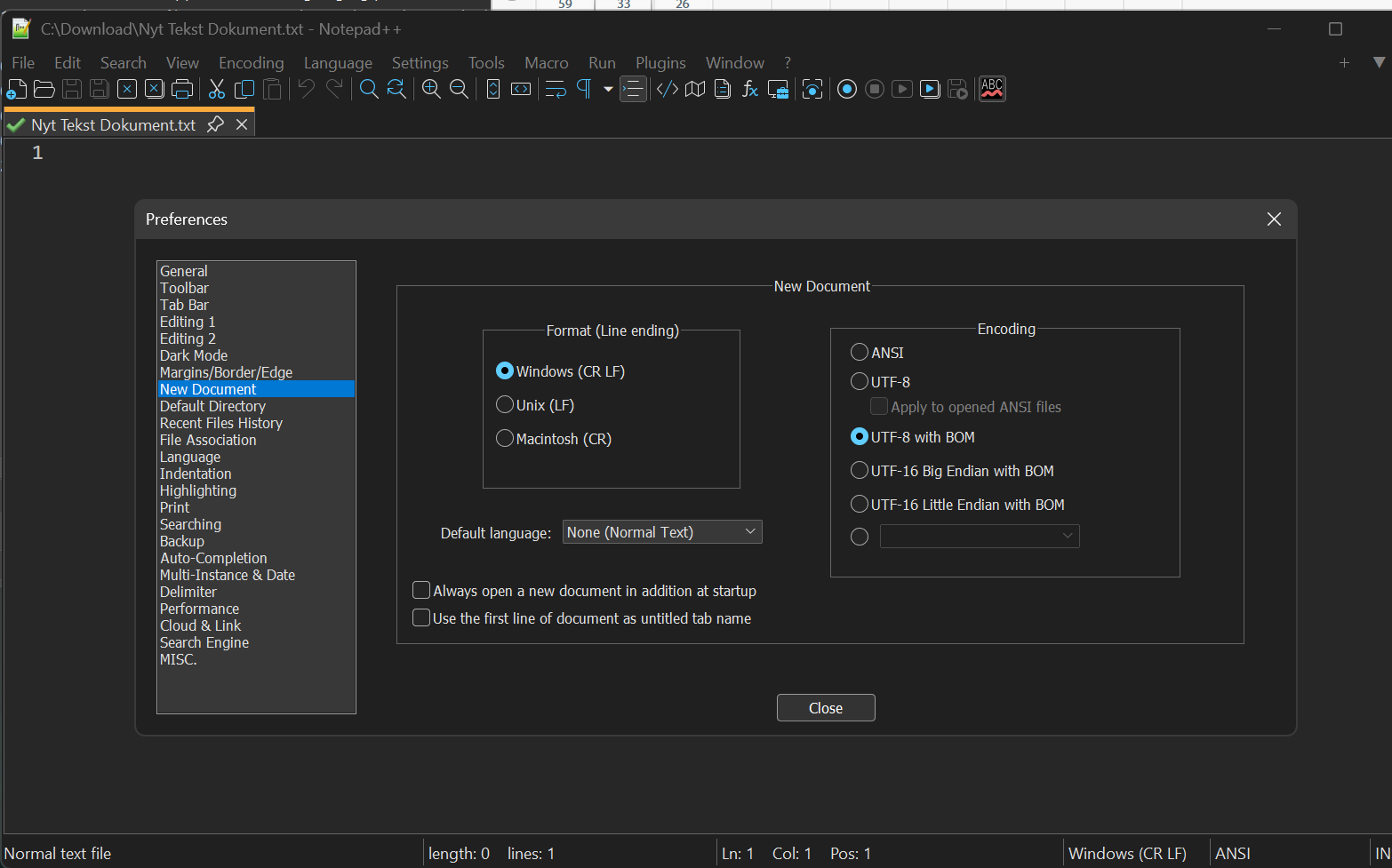

Go to Settings → Preferences → New Document → Encoding.

Select ANSI as the default.

Check “Apply to opened ANSI files” if available.

Note: Some characters (like æøå in certain ANSI pages) may not display correctly in pure ANSI — using Windows-1252 is safer for Western European letters.

This ensures new files default to ANSI, but remember Notepad++ may still switch if characters aren’t supported in that code page.

-

@NolanNolan said in Standard ANSI and code still change to something else:

i now changed the coding to UTF-8 BOM, assuming this sets the default for all new txt files, but new files are still created as ANSI, when i right click empty space in file explorer and create new.

When you create a new .txt file from Windows Explorer | right-click | New | … Windows creates an empty file with the .txt extension.

By definition, UTF-8 with BOM means a file that begins with a UTF-8 byte order mark. An empty file, of course, doesn’t begin with anything, so it can only be either an ANSI file or a UTF-8 (no BOM) file.

That all makes perfect sense if you think like a computer, and no sense if you think like a human being.

If you are brave, it is possible to change this behavior by editing the registry. (If “edit the registry” means nothing to you, I’m going to suggest that you stop right here and avoid potentially messing up your system. If you know how to edit the registry, read on.)

First, open Notepad++ and save a new, empty file as UTF-8 with BOM using a name with a .txt extension. Choose a place to store it where it won’t be disturbed. Remember the full path and name of the file.

Open regedit and locate

HKEY_CLASSES_ROOT\.txt\ShellNew. Delete the valueNullFile. Create a new String Value namedFileName, then edit it to set its value to the full path and name of the file you saved.Now, when you create a new .txt file, instead of being empty, it will contain a UTF-8 byte order mark.

Reference:

https://learn.microsoft.com/en-us/windows/win32/shell/context#extending-the-new-submenu -

thanks, yes that worked perfectly, although it seems not to use the full path just the filename is to be used

thanks again :-)

best Nolan

-

Thanks Thomas

who would have thought it could be so cumebersome to make a simple txt file work in modern windows

Thanks again :-)

best Nolan

-

@Thomas-Anderson said in Standard ANSI and code still change to something else:

Notepad++ auto-detects encoding based on the characters you type.

Please don’t make false claims like that. It doesn’t help anyone.

When you enter Danish letters like æ, ø, å, these aren’t part of standard ANSI,

You really don’t understand encoding. You probably shouldn’t be giving advice in such a conversation. (Update: Specifically, as I described above, “ANSI” is a misnomer, and when Notepad++ is using ANSI, it’s really using the default codepage for your installation of Windows, so for some people, who have set Windows to a Danish localization, or another localization that uses a Dutch-compatible character set, the “ANSI” selection in Notepad++ will know the Danish letters.) (Update 2: Besides, Windows-1252 encoding does have æ, ø, å, at codepoint 230, 248, and 229,respectively. And since Windows-1252 is what the vast majority of US and Western Europeans have their Windows set to accommodate, “ANSI” for all of those people will include those characters.)

so Notepad++ switches to a code page that can support them (like Windows-1252 or sometimes misdetects as 1255).

That’s not what Notepad++ does. Update: it follows the settings, as described in my post above, when you create a new file, regardless of what you type; however, when you open an existing file, it will use heuristics to guess the encoding, but that has nothing to do with typing.

To always save in ANSI:

Go to Settings → Preferences → New Document → Encoding.

Select ANSI as the default.

Check “Apply to opened ANSI files” if available.This proves you don’t know what you’re talking about. The

Apply to opened ANSI filesis only available for the UTF-8 option.Note: Some characters (like æøå in certain ANSI pages) may not display correctly in pure ANSI — using Windows-1252 is safer for Western European letters.

And if you tried to enter one of those characters while in a file (new or otherwise) under Notepad++'s “ANSI” setting, it would show up as a

?, not as the character. Which proves both your statement here, and the line above where you claimed Notepad++ changes encoding as you type, to be completely fallacious and false and misleading.This ensures new files default to ANSI, but remember Notepad++ may still switch if characters aren’t supported in that code page.

Wrong.

Nearly everything you said in that post is wrong.

Based on this, and the other posts you’ve written, I am coming to the conclusion that you are violating this Forum’s requirement that posts be human-generated, not bot/AI/GPT/LLM-generated. Your posts have always sounded to me like they are LLM/GPT-generated, and this one has pretty much clenched the deal. Please stop spreading AI nonsense. (And if you aren’t using AI, then me believing that you are using AI should tell you something about the quality (or lack thereof) of your posts.)

-

@NolanNolan said in Standard ANSI and code still change to something else:

But really weird that using Microsofts own notepad.exe that comes with a standard windows installation makes windows search not detect characters in txt files that belongs to the installation language of the OS.

Perhaps not quite as strange as it might first appear.

Support for Unicode in Windows dates back to the first release of Windows NT in 1993. (NT was a “business” operating system; it took another eight years or so to get Unicode into “consumer” systems.) The thing is, Windows chose to support 16-bit characters: UCS-2, which later became UTF-16. UTF-8 wasn’t even presented publicly until 1993, and it took many more years for it to become popular. Most early adopters of Unicode, like Windows, used 16-bit “wide” characters.

So, for a long time, in Windows “Unicode” meant UTF-16. Windows XP (2001) introduced code page 65001 for UTF-8, but it was only useful in conversion functions and console sessions. In Windows 10 Version 1903 (May 2019), it became possible to set UTF-8 (65001) as the system code page; however, that doesn’t (yet, in 2025 at least) do as much as you might hope it would, and it can precipitate odd behavior in software. (I tested your specific case: setting Use Unicode UTF-8 for worldwide language support does not change how search in Windows Explorer interprets files without a byte order mark.)

Files using legacy (“ANSI”) encodings are too common to ignore, but, as @PeterJones pointed out in his earlier post in this thread, there is no completely reliable way to distinguish an “ANSI” encoding from UTF-8. Windows chose to use the byte order mark (already in use in UTF-16 files) to signal when a file is UTF-8. Windows simply does not recognize a file without a byte order mark as Unicode.

Notepad++ uses byte order marks, too, but it also recognizes when a file has a very high likelihood of being UTF-8 (without a byte order mark). This is possible because the details of UTF-8 encoding make it highly unlikely that a legacy text file will “accidentally” also be a valid UTF-8 file — unless it is very short, has been intentionally crafted to trigger false detection, or contains only ASCII characters. (Since ASCII characters are represented identically in UTF-8 and in legacy code pages, the last case only matters if you edit a file which contained only ASCII characters so that it contains one or more non-ASCII characters. In that case, it is important to set your intended encoding depending on how the file will be used.)

What you’re confronting is the difference between how Windows detects UTF-8 (must have a byte order mark) and how Notepad++ detects UTF-8 (valid UTF-8 byte sequence, which is statistically highly unlikely to be a legacy encoding).

There is no good solution to this without inventing a time machine and changing decisions that were made over three decades ago.

Well… no good solution that does not sacrifice reasonable backward compatibility. I consider that one of Windows’ best features, and I admire Microsoft for sticking to it. Twenty-year old programs can still run on current versions of Windows. I hate the culture of “If it’s not constantly maintained and upgraded, junk it!” that’s overtaken most of the computing world. A job once done well should stay done. (I suspect this has a lot to do with Microsoft’s dominance in business applications.) Not everyone shares my view.

-

T Terry R referenced this topic on

T Terry R referenced this topic on